AUCTORES

Globalize your Research

Research Article | DOI: https://doi.org/10.31579/2690-4861/311

1 Department of Information Science, Faculty of Computing and Informatics, Jimma Institute of Technology, Jimma University, Oromia, Ethiopia.

2 Department of Epidemiology, Jimma Institute of Health, Jimma University, Oromia, Ethiopia.

*Corresponding Author: Chala Diriba, Gülhane Department of Information Science, Faculty of Computing and Informatics, Jimma Institute of Technology, Jimma University, Oromia, Ethiopia.

Citation: Diriba C., Jimma W., Merga H., (2023), Developing Risk Level Prediction Model and Clinical Decision Support System for Cardiovascular Diseases in Ethiopia, International Journal of Clinical Case Reports and Reviews, 13(5); DOI: 10.31579/2690-4861/311

Copyright: © 2023, Chala Diriba. This is an open-access article distributed under the terms of the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original author and source are credited.

Received: 02 May 2023 | Accepted: 15 May 2023 | Published: 23 May 2023

Keywords: cardiovascular diseases; fuzzy logic; data mining; decision support system

Cardiovascular diseases have become one of the severe health problems in both developing and developed countries. This research aimed to develop a risk level prediction model and clinical decision support system for CVD in Ethiopia using data mining techniques. A total of 4004 datasets were used to develop the model. Moreover, primary data was collected from the domain experts via interviews and questionnaires. The domain experts identified thirty-one risk factors, of which only eleven attributes were selected after experimentation to develop the model. Based on the result of experimentation, the model was developed by an unpruned J48 classifier algorithm which produced F-Measure 0.877, which is comparatively the best algorithm. The prototype system was developed by Visual C# studio tool. The developed prototype system helps health care providers to identify risk level CVD diseases. It was developed using a data mining technique, which can efficiently predict cardiovascular disease risk levels. However, developing the model by using more datasets and changing the default setting of WEKA, a data mining tool, will be the future work of this study.

Healthy populations contribute significantly to a country's economic success because they can live longer, be more productive, and save more. Unfortunately, Ethiopia has a poor health status and weak health care systems and infrastructure, compared to other low-income countries, even within Sub-Saharan Africa [1]. The low life expectancy in Ethiopia, only 58.37 years, results from poor health facilities [2]. Modern diagnostic equipment typically needs sophisticated infrastructure, consistent electrical power, expensive reagents, lengthy examination duration, and skilled professionals, none of which are available in environments with minimal resources [3]. The failure to correctly diagnose many communicable and non-communicable illnesses also plays a crucial role in mortality. This is far higher in developing countries than in developed countries.

Non-communicable diseases (NCDs), such as cancer, chronic respiratory conditions, cardiovascular, and diabetes, cause the death of over 40 million people each year, accounting for 70% of all deaths worldwide [4]. Over 80% of these deaths affect 15 million people between the ages of 30 and 69 every year in low and middle-income countries.

In the world, cancers account for 8.8 million deaths from NCDs each year, followed by respiratory (3.9 million), diabetes (1.6 million), and cardiovascular diseases (17.7 million) [4]. A few decades ago, it was considered to be a developed-nation issue. However, reports indicate that it is becoming a significant health issue for middle- and low-income countries. It estimated the total economic losses in low- and middle-income countries between 2011 and 2025 at USD 7 trillion [5]. In addition, it is the second largest cause of death overall, and the primary cause of death for people over 30 is CVD [6].

According to the Global Burden of Disease research, CVD caused roughly 32% of all deaths globally in 2013, with around 80% of these deaths happening in low- and middle-income countries [7]. Heart failure, rheumatic heart disease, congenital heart disease, ischemic heart disease, cerebrovascular disease, and peripheral vascular disease are the six different types of cardiovascular diseases. In most Sub-Saharan African countries, rheumatic heart disease was the most frequent cardiovascular disease, followed by hypertensive heart disease, but little was known about the pattern of congestive heart failure in Ethiopia. However, in a study done by [8] at Black Lion Specialized Hospital medical ward to evaluate the severity of rheumatic heart disease, it was found that this heart disease was a common cause of mortality (26.5%). Additionally, the most prevalent primary diagnosis in Ethiopia was rheumatic heart disease (62%), with a substantially more significant proportion in the third decade of life [9]. According to another study, this condition is the main contributor to cardiovascular disease and mainly affects young people [10].

A large fraction of the cases was also caused by hypertensive and ischemic cardiac conditions [11]. Cardiovascular diseases (CVDs) generally place a significant financial and health burden on developing nations. Risk variables include age, echocardiogram, heart rate, diabetes, slope, hypertension, high cholesterol, and physical inactivity are the critical factors used to predict heart disease. Many of these obvious risk factors are present in heart disease patients, making it possible to identify them promptly. The major CVD risk factors are elevated blood pressure, obesity, and physical inactivity were more prevalent in urban populations, while binge drinking and cigarette smoking were more prevalent in rural areas [12].

Most CVD cases depend on a complicated combination of clinical and pathological evidence for risk level prediction. Therefore, it is essential to have the correct diagnosis as soon as possible. The risk level categories for a 10-year total risk of a fatal or non-fatal CVD event include 10% classed as "low risk," 10- 20% as "moderate risk," 20- 30% as high risk, and 30% as "very high risk," according to (WHO, 2017) [13].

Cardiologists are highly scarce in developing nations, as is the situation with Ethiopia's primary-level hospitals (sometimes referred to as district hospitals). It is challenging to make accurate diagnoses and administer the proper treatments in Ethiopia and other developing nations when there is a lack of facilities, such as lab equipment and professionals. Furthermore, because primary-level hospitals lack advanced medical technology and lack the competence needed to perform high-quality medical procedures, forecasting the risk level of CVD is hardly ever attainable.

The decision support system (DSS) can be beneficial in predicting heart disease risk [14]. It can lessen medical errors that result in fatalities, improve patient safety, and save lives [15]. Additionally, it can deliver knowledge and person-specific information intelligently filtered and given at the right time to physicians, staff, patients, and others to improve healthcare [16]. Implementing DSS serves to support rather than replace [17]. The cost-effectiveness of DSS implementation should also be taken into consideration by low- to medium-income countries [18]. DSS to be utilized at point-of-care (POC) can save hundreds of thousands of lives per year in environments with low resources or where it is exceedingly difficult to physically access critical facilities.

Healthcare organizations that use data mining technologies have the power to predict future patient needs, wants, and conditions in order to make appropriate and effective treatment decisions. Data mining techniques are particularly effective and efficient for building DSS [19]. Healthcare professionals receive incredible knowledge, support and experience through predictive data mining. A prediction algorithm's goal is to predict future values based on historical data. To provide precise and trustworthy aid in developing prognosis, diagnosis, and treatment planning processes. Neural networks, regression, support vector machines (SVM), and discriminant analysis are a few examples of standard prediction algorithms.

Recently, control and failure detection tasks have been predicted using data mining approaches such as neural networks, fuzzy logic systems, evolutionary algorithms, and rough set theory [21]. Based on these clinical parameters, the DSS algorithm created for the prediction of heart disease produces prediction accuracy that is close to 80%. (Chen, 2011). Additionally, the proposed method created by [22] employing neural networks predicted the CVD risk with a 98.57

Study Sites

The data were collected from different public specialized hospitals in Ethiopia. It includes Jimma University, Black Lion Specialized Hospital and Alert Specialized Hospitals. These hospitals were selected purposely due to their provision of advanced treatment for the diseases.

Study population

The populations of the study were domain experts. Domains experts were to identify risk factors, validate extracted rules and perform user acceptance testing.

Data type and sources

For this research, both primary and secondary data were used. Secondary data were collected from books, journal articles and websites, while primary data were collected from domain experts and patient records.

Sampling

A purposive sampling technique was used to select the hospitals, and patients' records were systematically selected. For the selection of the domain experts, a purposive sampling technique was employed by considering their specialization and experience in the area.

Data collection method

Necessary data were collected by interviewing the professionals in the area to get detailed information about risk factors of CVDs, validate extracted rules and questionnaire for user acceptance testing. In addition, patients' cases of cardiovascular disease were collected from hardcopies of patients' history from the hospitals mentioned above.

Accordingly, the following cardiovascular disease risk factors were identified as depicted in table 1.

| No. | Risk factors | Description |

| Gender | male/female | |

| Age | Years | |

| Total Cholesterol | mmol/L | |

| HDL Cholesterol | mmol/L | |

| Systolic blood pressure | mm HG | |

Blood pressure treatment (anti-hypertensives prescribed) | yes/no | |

| Smoking | yes/no | |

| Diabetes | yes/no | |

| Body Mass Index | kg/m2 | |

| LDL cholesterol | mmol/L | |

| Triglycerides | mmol/L | |

| C-reactive protein (CRP) | mg/L | |

| Serum fibrinogen | g/l | |

| Gamma glutamyl transferase (gamma GT) | IU/L | |

| Serum creatinine | g/L | |

| Glycated haemoglobin (HbA1c) | % | |

| Forced Expiratory Volume (FEV1) | % | |

| AST/ALT ratio | - | |

| Family history of CHD < 60> | yes/no | |

| Townsend deprivation index | 1st quintile (most af¯uent)± 5th quintile (most deprived); unknown | |

| Hypertension | yes/no | |

| Rheumatoid arthritis | yes/no | |

| Atrial fibrillation yes/no | yes/no | |

| Chronic obstructive pulmonary disease (COPD) | yes/no | |

| Severe mental illness | yes/no | |

| Prescribed anti-psychotic drug | yes/no | |

| Prescribed oral corticosteroids | yes/no | |

| Prescribed immunosuppressant | yes/no |

Table 1: Cardiovascular risk factors

Source (Weng et al., 2017)

The study has several components, including building the model and developing a decision support system. The dataset was processed using the WEKA tool, and different data mining classifiers were applied to build the model. In addition, the cross-Industry Standard Process for Data Mining (CRISP-DM) method was employed. This method includes business understanding, data understanding, data preparation, modelling, evaluation and deployment. The data were acquired from different hospitals in Ethiopia to provide evidence for generating the rule sets used during the decision support process. Today's healthcare industry creates massive amounts of complex data on patients, hospitals, disease diagnoses, electronic patient records, and medical gadgets. Data mining is a technique for uncovering previously unknown patterns and trends in databases to develop predictive models. A large amount of data is a crucial resource to be processed and analyzed for knowledge extraction and support cost savings and decision-making.

The extracted rules, which were done by using a data mining technique, were validated by experts. Then DSS for risk level prediction of cardiovascular diseases was developed by extracted rules. WEKA software and Visual C# studio programming were used for risk level model and system development, respectively. Model performance testing was done by experimenting using different data mining classifiers and calculated by using recall, precision and F-Measure. Finally, the user acceptance testing was conducted by participating professionals. Accordingly, user acceptance testing data were collected using a questionnaire, which was analysed using the ResQue (Recommender Systems' Quality of user experience) model.

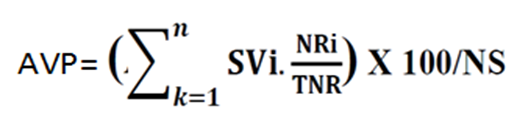

Where, AVP is average performance scale value (SV) and, TNR is total number of respondents, NS is number of scale and NR is number of respondents.

Then the result of user acceptance average performance is calculated out 100%.

This study's objective was to develop a risk level prediction system for cardiovascular diseases using data mining techniques. For this study, data were collected from a total of 4004 patients' records from Jimma University, Black Lion and Alert Specialized Hospitals. Then, it was coded in excel and changed into a file in the format Weka software understands: Comma delimited (.CSV) data file. Then, the data were inserted into Weka to preprocess and remove redundancies, fill missed values, correct related values to the attributes, and type missed values. Then, the first scenario experiments were done with all data and all attributes by using classifier algorithms. After this scenario attributes, a section was done on the preprocessed data. In the end, 11 attributes were selected from 31 attributes. Then, using the selected 11 attributes, the second scenario was performed using different classification algorisms like Naïve Bayes, PART and J48. Finally, unpruned J48 decision tree algorism was used to construct the model.

Many experiments were done on this data for preprocesses, attribute selection and model development. While information gain, CFS subset evaluator and information gain ratio were done for attributes selection, Naïve Bayes, PART and J48 were performed for classifications. For this study, as different scholars used it, 10-fold cross-validation was employed. For instance, it is recommended that 10-fold-validation is the correct number of folds to get the best estimate of error, and there is also some theoretical evidence that backs this up, like 10-fold cross-validation has become the standard method in practical terms. In addition, to select the best algorithms, the researchers selected a confusion matrix to calculate precision, recall and F-Measure. Since F-measure (also known as F1 or F-score) is a measure of a test's accuracy, it considers both the test's precision and recalls to compute the score. It can be interpreted as a weighted average of the precision and the recall, where 1 is its best value and 0 is its worst. The F-Measure only produces a high result when precision and recall are balanced; thus, using this technique is very significant. Moreover, the researchers used a 70% split test. The details of the experiments were discussed as follows.

Experiment

This sub-section is the backbone of the study; the results are presented and discussed. To achieve this study's objective, different experiments were done using data mining algorithms. However, PART induction decisions, J48, and Naïve Bayes classifiers algorithms showed good performance compared to the rest of the data mining classifiers. Therefore, the experiments of those algorithms were presented and discussed as follows by categorizing them into two big scenarios/experiments with all trained data and with the selected attributes.

Model Building Using Naïve Bayes classifier with all the training data

Naïve Bayes classifier use estimator classes. The precision levels of numeric estimators are determined by analyzing the training data. Based on this concept, correctly classified instances were 2831(70.7043%), and incorrectly classified instances were 1173(29.2957%). This was confirmed by confusion matrix results generated, which are presented by shaded cell values 2831, equal with correctly classified instances, and unshaded cell values 1179, equal with incorrectly classified instances, as presented in table 2. In addition, Naïve Bayes produced 0.706 F-Measure. This was relatively poor compared to the two algorithms.

| Low | High | Medium | |

| 2099 | 55 | 315 | Low |

| 75 | 239 | 371 | High |

| 287 | 70 | 493 | Medium |

Table 2: Naïve Bayes Confusion Matrix

Model Building Using PART classifier

The experiment was conducted by using a PART classifier. By this classifier, two scenarios were applied: pruned PART rule induction and unpruned PART rule induction classifier.

In the first scenario, the algorithm pruned PART rule induction containing 4004 instances with 31 attributes was performed. It took 2.48 seconds to build the model and generated 154 numbers of rules. It is presented in table 3 below. In addition, the model built with pruned PART rule induction with all attributes correctly classified (predicted the correct outcome) 3441(85.9391%) instances while 563(14.0609%) of the instances were classified incorrectly. In addition, the accuracy of the algorithms by F-Measure produced 0.857 performances. Therefore, it can be said that pruned PART rule induction showed good performance.

In the second scenario, unpruned PART rule induction with all data and attributes was performed. It took 17.11 seconds to build the model and generated 763 rules. In addition, correctly classified instances 3577(89.3357%) and incorrectly classified instances 4279 (10.6643 %). In addition, it showed a 0.893 F-Measure. As a result, unpruned PART rule induction showed the best performance compared to Naïve Bayes and pruned PART rule induction.

Model Building Using J48 classifier

This classifier also has two experiments J48 pruned decision tree with all attributes and a J48 unpruned decision tree with all attributes. J48 is another popular data mining algorithm. J48 pruned decision tree was used in this first experiment. This algorithm showed 251 numbers of leaves, 394 sizes of the tree and 0.41 seconds to build the model. In addition, correctly classified instances 3432 (85.7143 %), incorrectly classified instances 572 (14.2857 %). Moreover, the F-Measure generated by algorism was 0.856.

In the second experiment, J48 unpruned decision tree which generates 952 numbers of leaves, 1416 sizes of the tree and 0.35 seconds to build the model. Adding, correctly classified instances were about 3580 (89.4106 %) incorrectly classified instances were 424 (10.5894 %). Moreover, this algorithm produced 0.893 F-Measure. In conclusion, based on the experiment done for all data and attributes, the J48 unpruned decision tree showed the best performance. The summary is depicted in below table 3.

| Type of classifier | TP | FP | Precision | Recall | F-Measure |

| Naïve Bayes with all attributes | 0.707 | 0.198 | 0.727 | 0.706 | 0.706 |

| Pruned PART rule induction with all attributes | 0.859 | 0.125 | 0.857 | 0.859 | 0.857 |

| Unpruned PART rule induction with all attributes | 0.887 | 0.098 | 0.887 | 0.887 | 0.886 |

| J48 pruned decision tree with all attributes | 0.857 | 0.117 | 0.857 | 0.857 | 0.856 |

| J48 unpruned decision tree with all attributes | 0.894 | 0.096 | 0.893 | 0.894 | 0.893 |

Table 3: Summary of the performance of all algorithms used to build models

Attributes selection

Attributes selection is essential to select necessary attributes and remove insignificant attributes to develop a clear and good model. Therefore, to select significant attributes WEKA, the data mining tool has different techniques such as correlation-based feature selection (CFS) subset evaluator, classifier attribute eval, classifier subset evaluator, correlation attribute eval, gain ratio attribute eval and information gain attribute eval. All the experiments were done by algorithms which the WEKA tool support but correlation-based feature selection (CFS) subset evaluator, gain ratio attribute eval, and information gain attribute eval produced excellent performance compared to the rest of the algorithms. The experiment of these algorithms is briefly described below.

CfsSubsetEval: Correlation-based Feature Selection (CFS) Subset Evaluator

CFS Subset Evaluator evaluates the worth of a subset of attributes by considering each feature's individual predictive ability and the degree of redundancy between them. As a result of this technique, only seven attributes were selected from 31 attributes, i.e., age, smoking, family history of coronary heart disease < 60>

Figure 1: Attribute selection by Cf s Subset Eval

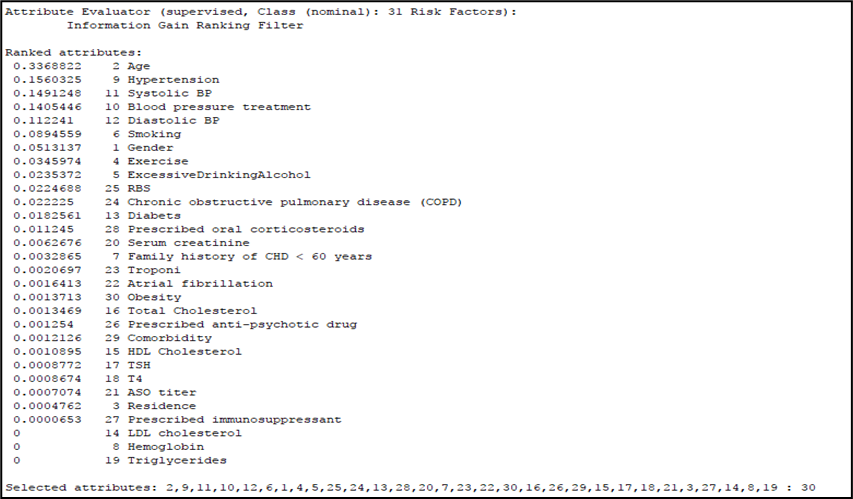

Information gain

Information gain evaluates the worth of an attribute by measuring the information gained with respect to the class. It gives the rank for all attributes based on their information gain. The highest information gain is at the top of the list, while the lowest information gain is at the bottom. The best attribute is the one which has an information gain of one (1), and the worst is zero (0). In addition, the attributes were selected by average information gain; only those with greater than average information gain were identified as good attributes for model development. Accordingly, age, hypertension, systolic blood pressure, blood pressure treatment, diastolic blood pressure, smoking and gender were the attributes selected, which produced information again greater than average, i.e. 0.04426, as depicted in figure 2 below.

Figure 2: Attributes selection by Information gain

Information gain ratio

Information gain ratio evaluates the worth of an attribute by measuring the gain ratio with respect to the class. Using an information gain ratio algorithm for attributes selection of smoking, hypertension, blood pressure, treatment, excessive drinking of alcohol, age, diabetes, systolic blood pressure, diastolic blood pressure, exercise, chronic obstructive pulmonary disease and gender. This indicates that from 31 risk factors of cardiovascular diseases, only 11 attributes were selected by the information gain ratio technique (Figure 3).

In conclusion, after the three experiments, some of the attributes were identified by the three algorithms, information gain ratio almost covered attributes selected by both information again and (CFS) Subset Evaluator and included more attributes than the two algorithms. Based on these results, the information gain ratio was selected as the best algorithm for attribute selection. Finally, the model was developed using smoking, hypertension, blood pressure treatment, excessive drinking of alcohol, age, diabetes, systolic blood pressure, diastolic blood pressure, exercise, chronic obstructive pulmonary disease and gender.

Figure 3: Attributes selection by Information gain ratio

Experiments with selected attributes

The algorithm was run on a complete training set containing 4004 instances with the selected 11 attributes by Naïve Bayes, pruned PART rule induction, unpruned PART rule induction, pruned J48 decision tree and unpruned J48 decision tree Classifier.

Model Building Using Naïve Bayes Classifier selected attributes

In the second scenario, the algorithm was run on a complete training set containing 4004 instances with selected 11 attributes. It took 0.02 seconds to build the model, and the model generated correctly classified instances 2897 (72.3526 %), incorrectly classified instances 1107 (27.6474 %.) and 0.708 F-Measure.

Model Building Using PART rule induction

Using pruned PART rule induction, 182 rules were generated, and it took 0.63 seconds to build the model, correctly classified instances 3478 (86.8631 %) and incorrectly classified instances 526 (13.1369 %), and 0.867 F-Measure.

In addition, the data were tested by unpruned PART rule induction, which generated 349 rules in 1.2 seconds to build the model, correctly classified instances 3457 (86.3387%) and incorrectly classified instances 547 (13.6613 %), and 0.861 F-Measure.

Therefore, it is possible to conclude that from the two-part rule induction, pruned PART rule induction yielded better performance. Moreover, not only with unpruned PART rule induction, it also provided better performance than naïve Bayes.

Model Building Using J48 decision tree Classifier

J48 decision tree classifier is another algorithm for data mining to develop a model. Since then, the J48 decision tree classifier has been used to determine the most appropriate model for the data. It has two techniques pruned J48 decision tree classifier and an unpruned J48 decision tree classifier. By the first technique, pruned J48 decision tree classifier, the experiment showed 131 number leaves, 261 sizes of the tree, in 0.11 seconds to build the model, correctly classified instances 3385 (84.5405 %), incorrectly classified instances 619 (15.4595 %) and 0.843 F-Measure. By the second unpruned J48 decision tree classifier, 344 number of leaves, 687 sizes of the tree, 0.1 seconds to build model, correctly classified instances 3517 (87.8372 %), incorrectly classified instances 487 (12.1628 %) and 0.877 F-Measure were generated. In conclusion, the unpruned J48 decision tree classifier was the best algorithm to develop the expected model. The summary of the algorithm is presented in table 4 below, and a comparison of Naïve Bayes, PART rule induction and J48 decision tree models with selected attributes is shown in table 5.

| Low | High | Medium | |

| 2348 | 32 | 89 | Low |

| 59 | 540 | 86 | High |

| 174 | 47 | 629 | Medium |

Table 4: Confusion Matrix

| Type of classifier | TP | FP | Precision | Recall | F-Measure |

| Naïve Bayes with selected attributes | 0.724 | 0.228 | 0.701 | 0.724 | 0.708 |

| Pruned PART rule induction with selected attributes | 0.869 | 0.121 | 0.866 | 0.869 | 0.867 |

| Un pruned PART rule induction with selected attributes | 0.863 | 0.119 | 0.860 | 0.863 | 0.861 |

| J48 pruned decision tree with selected attributes | 0.845 | 0.130 | 0.842 | 0.845 | 0.843 |

| J48 unpruned decision tree with selected attributes | 0.878 | 0.109 | 0.876 | 0.878 | 0.877 |

Table 5: Comparison of Naïve Bayes, PART rule induction and J48 decision tree models with selected attributes

Sample rules generated by J48 unpruned decision tree

The following rules were retrieved for prototype system development from a total of 344 rules generated by the J48 unpruned decision tree method.

Age <= 47

| Hypertension = N

| | Smoking = N

| | | Chronic obstructive pulmonary disease = N

| | | | Diabetes = N

| | | | | Systolic Blood Pressure <= 130

| | | | | | Age <= 25: Low (1055.48/42.56)

| | | | | | Age > 25

| | | | | | | Age <= 26

| | | | | | | | Diastolic Blood Pressure <= 75: Low (14.99)

| | | | | | | Age > 20

| | | | | | | | Systolic Blood Pressure <= 165

| | | | | | | | | Systolic Blood Pressure <= 135: Medium (2.01/0.01)

| | | Age > 31

| | | | Exercise = N

| | | | | Chronic obstructive pulmonary disease (COPD) = N

| | | | | | Gender = F: Low (2.02)

| Hypertension = Y

| | Systolic Blood Pressure <= 135

| | | Diastolic Blood Pressure <= 85

| | | | Smoking = N

| | | | | Exercise = N

| | | | | | Age <= 33: Low (22.23/0.06)

| | | | | | Blood pressure treatment = Y

| | | | | | | Gender = F

| | | | | | | | Systolic Blood Pressure <= 175

| | | | | | | | | Systolic Blood Pressure <= 150

| | | | | | | | | | Systolic Blood Pressure <= 140.11

| | | | | | | | | | | Diastolic Blood Pressure <= 90

| | | | | | | | | | | | Age <= 31: Medium (2.0)

| | | | | | | | | Age > 29

| | | | | | | | | | Systolic Blood Pressure <= 165

| | | | | | | | | | | Systolic Blood Pressure <= 140.11: High (4.0/2.0)

| | | Smoking = Y

| | | | Age <= 34

| | | | | Diastolic Blood Pressure > 90: High (2.0/1.0)

Age > 47

| Systolic Blood Pressure <= 140.11

| | Smoking = N

| | | Age <= 58

| | | | Diabetes = N

| | | | | Diastolic Blood Pressure <= 90

| | | | | | Chronic obstructive pulmonary disease (COPD) = N

| | | | | | | Systolic Blood Pressure <= 135

| | | | | | | | Age <= 50: Low (157.4/17.0)

| | | | Diabetes = Y

| | | | | Gender = F

| | | | | | Diastolic Blood Pressure <= 75: High (3.0/1.0)

| | | Age > 58

| | | | Gender = F

| | | | | Diastolic Blood Pressure <= 50: Low (10.03)

| | | | | Diastolic Blood Pressure > 50

| | | | | | Hypertension = N

| | | | | | | Diabetes = N

| | | | | | | | Systolic Blood Pressure <= 115

| | | | | | | | | Exercise = N

| | | | | | | | | | Diastolic Blood Pressure <= 85

| | | | | | | | | | | Diastolic Blood Pressure <= 65: Low (38.05/12.0)

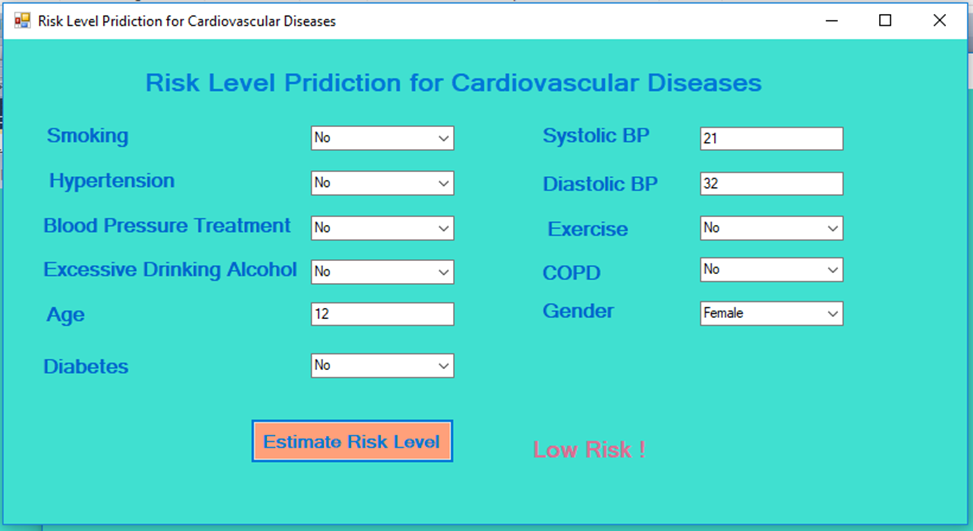

Decision support system for risk level prediction for cardiovascular diseases

Using extracted rules/patterns obtained by data mining classifiers algorithm that produced the best results, namely unpruned J48 decision tree, a user interface is developed, and the screenshot is depicted in figure 4 below, which can be used as a decision support system for risk level prediction for cardiovascular diseases.

Figure 4: Decision support system for risk level prediction for cardiovascular diseases.

Conclusion

This work aimed to create a model and system by employing data mining techniques which can predict the risk of cardiovascular diseases. Precision, recall, and the F-measure were used to assess the model performances. The training and test data samples were randomly selected using the 10-fold cross-validation method. The J48 unpruned classifier based on specified variables was found to be the most successful model to assess the risk level of patients with cardiovascular diseases since it accurately categorized 3580 (89.4106%) instances with an F-Measure value of 0.877.

Recommendations

The model was developed using the attributes which the model selected as well as confirmed by the domain experts and finally used to develop the decision support system to predict the risk level of cardiovascular diseases can be used as decision support where there is a lack of specialists of cardiovascular diseases esp., in the primary hospitals in Ethiopia. However, the total number of instances used for this study was four thousand four (4004). It is obvious that as the number of datasets increases, the performance of the model increase. Therefore, in future works adding the number of datasets and trying to increase the accuracy of the developed model is recommended. In addition, for the usability of the model, developing a knowledge-based system for risk level prediction by using extract rules is another exciting work.

The authors would like to acknowledge Jimma University for providing the fund to carry out this research and Jimma Medical Center for giving us the data. The authors are also grateful for the data collectors.

Clearly Auctoresonline and particularly Psychology and Mental Health Care Journal is dedicated to improving health care services for individuals and populations. The editorial boards' ability to efficiently recognize and share the global importance of health literacy with a variety of stakeholders. Auctoresonline publishing platform can be used to facilitate of optimal client-based services and should be added to health care professionals' repertoire of evidence-based health care resources.

Journal of Clinical Cardiology and Cardiovascular Intervention The submission and review process was adequate. However I think that the publication total value should have been enlightened in early fases. Thank you for all.

Journal of Women Health Care and Issues By the present mail, I want to say thank to you and tour colleagues for facilitating my published article. Specially thank you for the peer review process, support from the editorial office. I appreciate positively the quality of your journal.

Journal of Clinical Research and Reports I would be very delighted to submit my testimonial regarding the reviewer board and the editorial office. The reviewer board were accurate and helpful regarding any modifications for my manuscript. And the editorial office were very helpful and supportive in contacting and monitoring with any update and offering help. It was my pleasure to contribute with your promising Journal and I am looking forward for more collaboration.

We would like to thank the Journal of Thoracic Disease and Cardiothoracic Surgery because of the services they provided us for our articles. The peer-review process was done in a very excellent time manner, and the opinions of the reviewers helped us to improve our manuscript further. The editorial office had an outstanding correspondence with us and guided us in many ways. During a hard time of the pandemic that is affecting every one of us tremendously, the editorial office helped us make everything easier for publishing scientific work. Hope for a more scientific relationship with your Journal.

The peer-review process which consisted high quality queries on the paper. I did answer six reviewers’ questions and comments before the paper was accepted. The support from the editorial office is excellent.

Journal of Neuroscience and Neurological Surgery. I had the experience of publishing a research article recently. The whole process was simple from submission to publication. The reviewers made specific and valuable recommendations and corrections that improved the quality of my publication. I strongly recommend this Journal.

Dr. Katarzyna Byczkowska My testimonial covering: "The peer review process is quick and effective. The support from the editorial office is very professional and friendly. Quality of the Clinical Cardiology and Cardiovascular Interventions is scientific and publishes ground-breaking research on cardiology that is useful for other professionals in the field.

Thank you most sincerely, with regard to the support you have given in relation to the reviewing process and the processing of my article entitled "Large Cell Neuroendocrine Carcinoma of The Prostate Gland: A Review and Update" for publication in your esteemed Journal, Journal of Cancer Research and Cellular Therapeutics". The editorial team has been very supportive.

Testimony of Journal of Clinical Otorhinolaryngology: work with your Reviews has been a educational and constructive experience. The editorial office were very helpful and supportive. It was a pleasure to contribute to your Journal.

Dr. Bernard Terkimbi Utoo, I am happy to publish my scientific work in Journal of Women Health Care and Issues (JWHCI). The manuscript submission was seamless and peer review process was top notch. I was amazed that 4 reviewers worked on the manuscript which made it a highly technical, standard and excellent quality paper. I appreciate the format and consideration for the APC as well as the speed of publication. It is my pleasure to continue with this scientific relationship with the esteem JWHCI.

This is an acknowledgment for peer reviewers, editorial board of Journal of Clinical Research and Reports. They show a lot of consideration for us as publishers for our research article “Evaluation of the different factors associated with side effects of COVID-19 vaccination on medical students, Mutah university, Al-Karak, Jordan”, in a very professional and easy way. This journal is one of outstanding medical journal.

Dear Hao Jiang, to Journal of Nutrition and Food Processing We greatly appreciate the efficient, professional and rapid processing of our paper by your team. If there is anything else we should do, please do not hesitate to let us know. On behalf of my co-authors, we would like to express our great appreciation to editor and reviewers.

As an author who has recently published in the journal "Brain and Neurological Disorders". I am delighted to provide a testimonial on the peer review process, editorial office support, and the overall quality of the journal. The peer review process at Brain and Neurological Disorders is rigorous and meticulous, ensuring that only high-quality, evidence-based research is published. The reviewers are experts in their fields, and their comments and suggestions were constructive and helped improve the quality of my manuscript. The review process was timely and efficient, with clear communication from the editorial office at each stage. The support from the editorial office was exceptional throughout the entire process. The editorial staff was responsive, professional, and always willing to help. They provided valuable guidance on formatting, structure, and ethical considerations, making the submission process seamless. Moreover, they kept me informed about the status of my manuscript and provided timely updates, which made the process less stressful. The journal Brain and Neurological Disorders is of the highest quality, with a strong focus on publishing cutting-edge research in the field of neurology. The articles published in this journal are well-researched, rigorously peer-reviewed, and written by experts in the field. The journal maintains high standards, ensuring that readers are provided with the most up-to-date and reliable information on brain and neurological disorders. In conclusion, I had a wonderful experience publishing in Brain and Neurological Disorders. The peer review process was thorough, the editorial office provided exceptional support, and the journal's quality is second to none. I would highly recommend this journal to any researcher working in the field of neurology and brain disorders.

Dear Agrippa Hilda, Journal of Neuroscience and Neurological Surgery, Editorial Coordinator, I trust this message finds you well. I want to extend my appreciation for considering my article for publication in your esteemed journal. I am pleased to provide a testimonial regarding the peer review process and the support received from your editorial office. The peer review process for my paper was carried out in a highly professional and thorough manner. The feedback and comments provided by the authors were constructive and very useful in improving the quality of the manuscript. This rigorous assessment process undoubtedly contributes to the high standards maintained by your journal.

International Journal of Clinical Case Reports and Reviews. I strongly recommend to consider submitting your work to this high-quality journal. The support and availability of the Editorial staff is outstanding and the review process was both efficient and rigorous.

Thank you very much for publishing my Research Article titled “Comparing Treatment Outcome Of Allergic Rhinitis Patients After Using Fluticasone Nasal Spray And Nasal Douching" in the Journal of Clinical Otorhinolaryngology. As Medical Professionals we are immensely benefited from study of various informative Articles and Papers published in this high quality Journal. I look forward to enriching my knowledge by regular study of the Journal and contribute my future work in the field of ENT through the Journal for use by the medical fraternity. The support from the Editorial office was excellent and very prompt. I also welcome the comments received from the readers of my Research Article.

Dear Erica Kelsey, Editorial Coordinator of Cancer Research and Cellular Therapeutics Our team is very satisfied with the processing of our paper by your journal. That was fast, efficient, rigorous, but without unnecessary complications. We appreciated the very short time between the submission of the paper and its publication on line on your site.

I am very glad to say that the peer review process is very successful and fast and support from the Editorial Office. Therefore, I would like to continue our scientific relationship for a long time. And I especially thank you for your kindly attention towards my article. Have a good day!

"We recently published an article entitled “Influence of beta-Cyclodextrins upon the Degradation of Carbofuran Derivatives under Alkaline Conditions" in the Journal of “Pesticides and Biofertilizers” to show that the cyclodextrins protect the carbamates increasing their half-life time in the presence of basic conditions This will be very helpful to understand carbofuran behaviour in the analytical, agro-environmental and food areas. We greatly appreciated the interaction with the editor and the editorial team; we were particularly well accompanied during the course of the revision process, since all various steps towards publication were short and without delay".

I would like to express my gratitude towards you process of article review and submission. I found this to be very fair and expedient. Your follow up has been excellent. I have many publications in national and international journal and your process has been one of the best so far. Keep up the great work.

We are grateful for this opportunity to provide a glowing recommendation to the Journal of Psychiatry and Psychotherapy. We found that the editorial team were very supportive, helpful, kept us abreast of timelines and over all very professional in nature. The peer review process was rigorous, efficient and constructive that really enhanced our article submission. The experience with this journal remains one of our best ever and we look forward to providing future submissions in the near future.

I am very pleased to serve as EBM of the journal, I hope many years of my experience in stem cells can help the journal from one way or another. As we know, stem cells hold great potential for regenerative medicine, which are mostly used to promote the repair response of diseased, dysfunctional or injured tissue using stem cells or their derivatives. I think Stem Cell Research and Therapeutics International is a great platform to publish and share the understanding towards the biology and translational or clinical application of stem cells.

I would like to give my testimony in the support I have got by the peer review process and to support the editorial office where they were of asset to support young author like me to be encouraged to publish their work in your respected journal and globalize and share knowledge across the globe. I really give my great gratitude to your journal and the peer review including the editorial office.

I am delighted to publish our manuscript entitled "A Perspective on Cocaine Induced Stroke - Its Mechanisms and Management" in the Journal of Neuroscience and Neurological Surgery. The peer review process, support from the editorial office, and quality of the journal are excellent. The manuscripts published are of high quality and of excellent scientific value. I recommend this journal very much to colleagues.

Dr.Tania Muñoz, My experience as researcher and author of a review article in The Journal Clinical Cardiology and Interventions has been very enriching and stimulating. The editorial team is excellent, performs its work with absolute responsibility and delivery. They are proactive, dynamic and receptive to all proposals. Supporting at all times the vast universe of authors who choose them as an option for publication. The team of review specialists, members of the editorial board, are brilliant professionals, with remarkable performance in medical research and scientific methodology. Together they form a frontline team that consolidates the JCCI as a magnificent option for the publication and review of high-level medical articles and broad collective interest. I am honored to be able to share my review article and open to receive all your comments.

“The peer review process of JPMHC is quick and effective. Authors are benefited by good and professional reviewers with huge experience in the field of psychology and mental health. The support from the editorial office is very professional. People to contact to are friendly and happy to help and assist any query authors might have. Quality of the Journal is scientific and publishes ground-breaking research on mental health that is useful for other professionals in the field”.

Dear editorial department: On behalf of our team, I hereby certify the reliability and superiority of the International Journal of Clinical Case Reports and Reviews in the peer review process, editorial support, and journal quality. Firstly, the peer review process of the International Journal of Clinical Case Reports and Reviews is rigorous, fair, transparent, fast, and of high quality. The editorial department invites experts from relevant fields as anonymous reviewers to review all submitted manuscripts. These experts have rich academic backgrounds and experience, and can accurately evaluate the academic quality, originality, and suitability of manuscripts. The editorial department is committed to ensuring the rigor of the peer review process, while also making every effort to ensure a fast review cycle to meet the needs of authors and the academic community. Secondly, the editorial team of the International Journal of Clinical Case Reports and Reviews is composed of a group of senior scholars and professionals with rich experience and professional knowledge in related fields. The editorial department is committed to assisting authors in improving their manuscripts, ensuring their academic accuracy, clarity, and completeness. Editors actively collaborate with authors, providing useful suggestions and feedback to promote the improvement and development of the manuscript. We believe that the support of the editorial department is one of the key factors in ensuring the quality of the journal. Finally, the International Journal of Clinical Case Reports and Reviews is renowned for its high- quality articles and strict academic standards. The editorial department is committed to publishing innovative and academically valuable research results to promote the development and progress of related fields. The International Journal of Clinical Case Reports and Reviews is reasonably priced and ensures excellent service and quality ratio, allowing authors to obtain high-level academic publishing opportunities in an affordable manner. I hereby solemnly declare that the International Journal of Clinical Case Reports and Reviews has a high level of credibility and superiority in terms of peer review process, editorial support, reasonable fees, and journal quality. Sincerely, Rui Tao.

Clinical Cardiology and Cardiovascular Interventions I testity the covering of the peer review process, support from the editorial office, and quality of the journal.

Clinical Cardiology and Cardiovascular Interventions, we deeply appreciate the interest shown in our work and its publication. It has been a true pleasure to collaborate with you. The peer review process, as well as the support provided by the editorial office, have been exceptional, and the quality of the journal is very high, which was a determining factor in our decision to publish with you.

The peer reviewers process is quick and effective, the supports from editorial office is excellent, the quality of journal is high. I would like to collabroate with Internatioanl journal of Clinical Case Reports and Reviews journal clinically in the future time.

Clinical Cardiology and Cardiovascular Interventions, I would like to express my sincerest gratitude for the trust placed in our team for the publication in your journal. It has been a true pleasure to collaborate with you on this project. I am pleased to inform you that both the peer review process and the attention from the editorial coordination have been excellent. Your team has worked with dedication and professionalism to ensure that your publication meets the highest standards of quality. We are confident that this collaboration will result in mutual success, and we are eager to see the fruits of this shared effort.

Dear Dr. Jessica Magne, Editorial Coordinator 0f Clinical Cardiology and Cardiovascular Interventions, I hope this message finds you well. I want to express my utmost gratitude for your excellent work and for the dedication and speed in the publication process of my article titled "Navigating Innovation: Qualitative Insights on Using Technology for Health Education in Acute Coronary Syndrome Patients." I am very satisfied with the peer review process, the support from the editorial office, and the quality of the journal. I hope we can maintain our scientific relationship in the long term.

Dear Monica Gissare, - Editorial Coordinator of Nutrition and Food Processing. ¨My testimony with you is truly professional, with a positive response regarding the follow-up of the article and its review, you took into account my qualities and the importance of the topic¨.

Dear Dr. Jessica Magne, Editorial Coordinator 0f Clinical Cardiology and Cardiovascular Interventions, The review process for the article “The Handling of Anti-aggregants and Anticoagulants in the Oncologic Heart Patient Submitted to Surgery” was extremely rigorous and detailed. From the initial submission to the final acceptance, the editorial team at the “Journal of Clinical Cardiology and Cardiovascular Interventions” demonstrated a high level of professionalism and dedication. The reviewers provided constructive and detailed feedback, which was essential for improving the quality of our work. Communication was always clear and efficient, ensuring that all our questions were promptly addressed. The quality of the “Journal of Clinical Cardiology and Cardiovascular Interventions” is undeniable. It is a peer-reviewed, open-access publication dedicated exclusively to disseminating high-quality research in the field of clinical cardiology and cardiovascular interventions. The journal's impact factor is currently under evaluation, and it is indexed in reputable databases, which further reinforces its credibility and relevance in the scientific field. I highly recommend this journal to researchers looking for a reputable platform to publish their studies.

Dear Editorial Coordinator of the Journal of Nutrition and Food Processing! "I would like to thank the Journal of Nutrition and Food Processing for including and publishing my article. The peer review process was very quick, movement and precise. The Editorial Board has done an extremely conscientious job with much help, valuable comments and advices. I find the journal very valuable from a professional point of view, thank you very much for allowing me to be part of it and I would like to participate in the future!”

Dealing with The Journal of Neurology and Neurological Surgery was very smooth and comprehensive. The office staff took time to address my needs and the response from editors and the office was prompt and fair. I certainly hope to publish with this journal again.Their professionalism is apparent and more than satisfactory. Susan Weiner

My Testimonial Covering as fellowing: Lin-Show Chin. The peer reviewers process is quick and effective, the supports from editorial office is excellent, the quality of journal is high. I would like to collabroate with Internatioanl journal of Clinical Case Reports and Reviews.

My experience publishing in Psychology and Mental Health Care was exceptional. The peer review process was rigorous and constructive, with reviewers providing valuable insights that helped enhance the quality of our work. The editorial team was highly supportive and responsive, making the submission process smooth and efficient. The journal's commitment to high standards and academic rigor makes it a respected platform for quality research. I am grateful for the opportunity to publish in such a reputable journal.

My experience publishing in International Journal of Clinical Case Reports and Reviews was exceptional. I Come forth to Provide a Testimonial Covering the Peer Review Process and the editorial office for the Professional and Impartial Evaluation of the Manuscript.

I would like to offer my testimony in the support. I have received through the peer review process and support the editorial office where they are to support young authors like me, encourage them to publish their work in your esteemed journals, and globalize and share knowledge globally. I really appreciate your journal, peer review, and editorial office.

Dear Agrippa Hilda- Editorial Coordinator of Journal of Neuroscience and Neurological Surgery, "The peer review process was very quick and of high quality, which can also be seen in the articles in the journal. The collaboration with the editorial office was very good."

I would like to express my sincere gratitude for the support and efficiency provided by the editorial office throughout the publication process of my article, “Delayed Vulvar Metastases from Rectal Carcinoma: A Case Report.” I greatly appreciate the assistance and guidance I received from your team, which made the entire process smooth and efficient. The peer review process was thorough and constructive, contributing to the overall quality of the final article. I am very grateful for the high level of professionalism and commitment shown by the editorial staff, and I look forward to maintaining a long-term collaboration with the International Journal of Clinical Case Reports and Reviews.

To Dear Erin Aust, I would like to express my heartfelt appreciation for the opportunity to have my work published in this esteemed journal. The entire publication process was smooth and well-organized, and I am extremely satisfied with the final result. The Editorial Team demonstrated the utmost professionalism, providing prompt and insightful feedback throughout the review process. Their clear communication and constructive suggestions were invaluable in enhancing my manuscript, and their meticulous attention to detail and dedication to quality are truly commendable. Additionally, the support from the Editorial Office was exceptional. From the initial submission to the final publication, I was guided through every step of the process with great care and professionalism. The team's responsiveness and assistance made the entire experience both easy and stress-free. I am also deeply impressed by the quality and reputation of the journal. It is an honor to have my research featured in such a respected publication, and I am confident that it will make a meaningful contribution to the field.

"I am grateful for the opportunity of contributing to [International Journal of Clinical Case Reports and Reviews] and for the rigorous review process that enhances the quality of research published in your esteemed journal. I sincerely appreciate the time and effort of your team who have dedicatedly helped me in improvising changes and modifying my manuscript. The insightful comments and constructive feedback provided have been invaluable in refining and strengthening my work".

I thank the ‘Journal of Clinical Research and Reports’ for accepting this article for publication. This is a rigorously peer reviewed journal which is on all major global scientific data bases. I note the review process was prompt, thorough and professionally critical. It gave us an insight into a number of important scientific/statistical issues. The review prompted us to review the relevant literature again and look at the limitations of the study. The peer reviewers were open, clear in the instructions and the editorial team was very prompt in their communication. This journal certainly publishes quality research articles. I would recommend the journal for any future publications.

Dear Jessica Magne, with gratitude for the joint work. Fast process of receiving and processing the submitted scientific materials in “Clinical Cardiology and Cardiovascular Interventions”. High level of competence of the editors with clear and correct recommendations and ideas for enriching the article.

We found the peer review process quick and positive in its input. The support from the editorial officer has been very agile, always with the intention of improving the article and taking into account our subsequent corrections.

My article, titled 'No Way Out of the Smartphone Epidemic Without Considering the Insights of Brain Research,' has been republished in the International Journal of Clinical Case Reports and Reviews. The review process was seamless and professional, with the editors being both friendly and supportive. I am deeply grateful for their efforts.

To Dear Erin Aust – Editorial Coordinator of Journal of General Medicine and Clinical Practice! I declare that I am absolutely satisfied with your work carried out with great competence in following the manuscript during the various stages from its receipt, during the revision process to the final acceptance for publication. Thank Prof. Elvira Farina

Dear Jessica, and the super professional team of the ‘Clinical Cardiology and Cardiovascular Interventions’ I am sincerely grateful to the coordinated work of the journal team for the no problem with the submission of my manuscript: “Cardiometabolic Disorders in A Pregnant Woman with Severe Preeclampsia on the Background of Morbid Obesity (Case Report).” The review process by 5 experts was fast, and the comments were professional, which made it more specific and academic, and the process of publication and presentation of the article was excellent. I recommend that my colleagues publish articles in this journal, and I am interested in further scientific cooperation. Sincerely and best wishes, Dr. Oleg Golyanovskiy.

Dear Ashley Rosa, Editorial Coordinator of the journal - Psychology and Mental Health Care. " The process of obtaining publication of my article in the Psychology and Mental Health Journal was positive in all areas. The peer review process resulted in a number of valuable comments, the editorial process was collaborative and timely, and the quality of this journal has been quickly noticed, resulting in alternative journals contacting me to publish with them." Warm regards, Susan Anne Smith, PhD. Australian Breastfeeding Association.

Dear Jessica Magne, Editorial Coordinator, Clinical Cardiology and Cardiovascular Interventions, Auctores Publishing LLC. I appreciate the journal (JCCI) editorial office support, the entire team leads were always ready to help, not only on technical front but also on thorough process. Also, I should thank dear reviewers’ attention to detail and creative approach to teach me and bring new insights by their comments. Surely, more discussions and introduction of other hemodynamic devices would provide better prevention and management of shock states. Your efforts and dedication in presenting educational materials in this journal are commendable. Best wishes from, Farahnaz Fallahian.

Dear Maria Emerson, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews, Auctores Publishing LLC. I am delighted to have published our manuscript, "Acute Colonic Pseudo-Obstruction (ACPO): A rare but serious complication following caesarean section." I want to thank the editorial team, especially Maria Emerson, for their prompt review of the manuscript, quick responses to queries, and overall support. Yours sincerely Dr. Victor Olagundoye.

Dear Ashley Rosa, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews. Many thanks for publishing this manuscript after I lost confidence the editors were most helpful, more than other journals Best wishes from, Susan Anne Smith, PhD. Australian Breastfeeding Association.

Dear Agrippa Hilda, Editorial Coordinator, Journal of Neuroscience and Neurological Surgery. The entire process including article submission, review, revision, and publication was extremely easy. The journal editor was prompt and helpful, and the reviewers contributed to the quality of the paper. Thank you so much! Eric Nussbaum, MD

Dr Hala Al Shaikh This is to acknowledge that the peer review process for the article ’ A Novel Gnrh1 Gene Mutation in Four Omani Male Siblings, Presentation and Management ’ sent to the International Journal of Clinical Case Reports and Reviews was quick and smooth. The editorial office was prompt with easy communication.

Dear Erin Aust, Editorial Coordinator, Journal of General Medicine and Clinical Practice. We are pleased to share our experience with the “Journal of General Medicine and Clinical Practice”, following the successful publication of our article. The peer review process was thorough and constructive, helping to improve the clarity and quality of the manuscript. We are especially thankful to Ms. Erin Aust, the Editorial Coordinator, for her prompt communication and continuous support throughout the process. Her professionalism ensured a smooth and efficient publication experience. The journal upholds high editorial standards, and we highly recommend it to fellow researchers seeking a credible platform for their work. Best wishes By, Dr. Rakhi Mishra.

Dear Jessica Magne, Editorial Coordinator, Clinical Cardiology and Cardiovascular Interventions, Auctores Publishing LLC. The peer review process of the journal of Clinical Cardiology and Cardiovascular Interventions was excellent and fast, as was the support of the editorial office and the quality of the journal. Kind regards Walter F. Riesen Prof. Dr. Dr. h.c. Walter F. Riesen.

Dear Ashley Rosa, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews, Auctores Publishing LLC. Thank you for publishing our article, Exploring Clozapine's Efficacy in Managing Aggression: A Multiple Single-Case Study in Forensic Psychiatry in the international journal of clinical case reports and reviews. We found the peer review process very professional and efficient. The comments were constructive, and the whole process was efficient. On behalf of the co-authors, I would like to thank you for publishing this article. With regards, Dr. Jelle R. Lettinga.

Dear Clarissa Eric, Editorial Coordinator, Journal of Clinical Case Reports and Studies, I would like to express my deep admiration for the exceptional professionalism demonstrated by your journal. I am thoroughly impressed by the speed of the editorial process, the substantive and insightful reviews, and the meticulous preparation of the manuscript for publication. Additionally, I greatly appreciate the courteous and immediate responses from your editorial office to all my inquiries. Best Regards, Dariusz Ziora

Dear Chrystine Mejia, Editorial Coordinator, Journal of Neurodegeneration and Neurorehabilitation, Auctores Publishing LLC, We would like to thank the editorial team for the smooth and high-quality communication leading up to the publication of our article in the Journal of Neurodegeneration and Neurorehabilitation. The reviewers have extensive knowledge in the field, and their relevant questions helped to add value to our publication. Kind regards, Dr. Ravi Shrivastava.

Dear Clarissa Eric, Editorial Coordinator, Journal of Clinical Case Reports and Studies, Auctores Publishing LLC, USA Office: +1-(302)-520-2644. I would like to express my sincere appreciation for the efficient and professional handling of my case report by the ‘Journal of Clinical Case Reports and Studies’. The peer review process was not only fast but also highly constructive—the reviewers’ comments were clear, relevant, and greatly helped me improve the quality and clarity of my manuscript. I also received excellent support from the editorial office throughout the process. Communication was smooth and timely, and I felt well guided at every stage, from submission to publication. The overall quality and rigor of the journal are truly commendable. I am pleased to have published my work with Journal of Clinical Case Reports and Studies, and I look forward to future opportunities for collaboration. Sincerely, Aline Tollet, UCLouvain.

Dear Ms. Mayra Duenas, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews. “The International Journal of Clinical Case Reports and Reviews represented the “ideal house” to share with the research community a first experience with the use of the Simeox device for speech rehabilitation. High scientific reputation and attractive website communication were first determinants for the selection of this Journal, and the following submission process exceeded expectations: fast but highly professional peer review, great support by the editorial office, elegant graphic layout. Exactly what a dynamic research team - also composed by allied professionals - needs!" From, Chiara Beccaluva, PT - Italy.

Dear Maria Emerson, Editorial Coordinator, we have deeply appreciated the professionalism demonstrated by the International Journal of Clinical Case Reports and Reviews. The reviewers have extensive knowledge of our field and have been very efficient and fast in supporting the process. I am really looking forward to further collaboration. Thanks. Best regards, Dr. Claudio Ligresti

Dear Chrystine Mejia, Editorial Coordinator, Journal of Neurodegeneration and Neurorehabilitation. “The peer review process was efficient and constructive, and the editorial office provided excellent communication and support throughout. The journal ensures scientific rigor and high editorial standards, while also offering a smooth and timely publication process. We sincerely appreciate the work of the editorial team in facilitating the dissemination of innovative approaches such as the Bonori Method.” Best regards, Dr. Giselle Pentón-Rol.