AUCTORES


Review Article | DOI: https://doi.org/10.31579/2693-4779/198

IAE, 175-28, Goan-ro 51 beon-gil, Baegam-myeon, Cheoin-gu, Yongin-si, Gyeonggi-do, Korea

*Corresponding Author: Dongchan Lee, IAE, 175-28, Goan-ro 51 beon-gil, Baegam-myeon, Cheoin-gu, Yongin-si, Gyeonggi-do, Korea

Citation: Dongchan Lee, (2024), Design Considerations of Effective Haptic Device for Human-Robot Interaction under Virtual Reality and Embodiment, Clinical Research and Clinical Trials, 9(4); DOI:10.31579/2693-4779/198

Copyright: © 2024, Dongchan Lee. This is an open access article distributed under the Creative Commons Attribution License, which permits unrestricted use, distribution, and reproduction in any medium, provided the original work is properly cited.

Received: 10 March 2024 | Accepted: 15 March 2024 | Published: 25 March 2024

Keywords: smart haptic device; HRI (human-robot interaction); virtual reality; virtual embodiment

Human-Robot Interaction Technology (HRI) is a technology that enables robots to assess interactive situations and user intentions, allowing them to plan appropriate responses and actions, facilitating smooth communication and collaboration. HRI involves a synergy of perception, judgment, and expression technologies, wherein robots emulate human perceptual, cognitive, and expressive capabilities to infuse vitality into the field of robotics. This core technology extends beyond personal service robots, finding application in professional service robots and various service sectors, exerting a profound impact on the entire field of integrated robotics industries.

In this paper, the focus lies on judgment and expression technologies for haptic suit-based remote control. HRI technology is pivotal for breathing life into robots by mimicking human perception, cognition, and expressive functions. Its implications stretch across diverse applications, from personal service robots to professional service robots, making it a technology with significant ramifications for the entire field of integrated robotics industries.

HRI (Human-Robot Interaction Technology) is a foundational convergence technology aimed at achieving natural communication and seamless collaboration between humans and robots. To accomplish this goal, HRI research develops methods and technologies that enable robots to accurately assess interactive situations and user intentions, and to express appropriate behaviors and responses in context. HRI technology is similar to HCI (Human-Computer Interaction) in its approach, as it takes into account human perceptual, cognitive, and behavioral characteristics when designing interfaces and machines. However, HRI technology differs in that robots are inherently tangible and autonomous intelligent systems, fundamentally distinct from computers. Consequently, the bidirectional nature of interaction is more pronounced in robotics, and the diverse control levels required for robot operation set HRI technology apart from HCI in terms of research topics and technical approaches. [1-5]

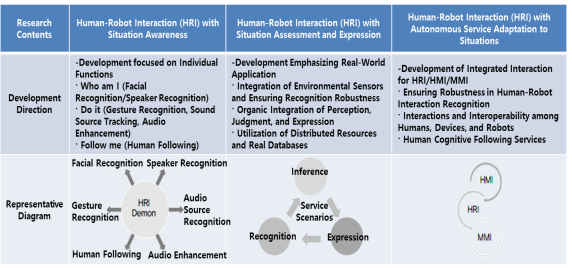

Major existing research encompasses situation awareness technology that infers user behavioral intentions, emotional states, personality, etc., situation prediction technology that anticipates the future states of interaction with users, and action planning technology that judges appropriate expressive content and methods in context. Key functionalities include the generation and execution of action recipes, integrated 3D sensing for localization and mapping, learning for robot control, dynamic object tracking, and more. [1,2] This requires a knowledge framework that generates robot action plans and executes them while tracking changes in the robot's state and the environment in real time. [3,4] Such a framework offers a mechanism to integrate and infer real-time changes in the robot's state, environmental variations, and diverse external knowledge, utilizing probabilistic inference methods such as MLN (Markov Logic Networks) and BN (Bayesian Networks) to model uncertain situations. Long-term interactions between users and robots, leading to the formation of social bonds, are studied to understand the differences in interaction between children and adults in daily life. Additionally, research is underway on sentiment, personalization, and personalized learning that supports growth through the storage and experience of long-term interactions and interaction episodes. [5] The conceptual organization of HRI technologies is shown in Figure 1, and the details are as follows.

Figure 1 Conceptual organization of HRI technologies

(a) Perception Technology: In the context of HRI (Human-Robot Interaction) technology, 'perception' refers to the function of gathering perceptual information about the interactive counterpart and the surrounding environment through sensory organs. Perception technology utilizes various sensor devices to collect visual, auditory, tactile signals, etc., and analyzes data patterns relevant to interaction. Representative perception technologies include face recognition, expression recognition, gesture recognition, posture recognition, object recognition, object tracking, speech recognition, sound source recognition, timbre recognition, and touch gesture recognition. To implement perception technology, a diverse range of sensing devices such as cameras (RGB, thermal imaging, infrared, RGB-D, etc.), microphones, inertial sensors, touch sensors, actuators based on the concept of artificial muscles, and touch panels are employed. [6,7]

(b) Judgment Technology: 'Judgment' is the function of interpreting the meaning of perceptual information collected in the perception stage, understanding the interactive situation and the intentions of the counterpart, and planning expressions and actions in accordance with the context. HRI (Human-Robot Interaction) judgment technology can be considered a field that has not yet been systematically established. Judgment technology is grounded in the processes underlying the formation, operation, and development of the human mind, and there is currently no systematic theory to fully explain these processes. Judgment technology encompasses a wide range of cognitive skills, including the structure and operation of memory, representation and inference of knowledge, changes in emotions, problem-solving, learning, and development. It possesses a fundamental and strong technical nature. [6,7]

(c) Expression Technology: 'Expression' is the function of effectively and clearly manifesting planned actions and expressions in the interaction context through various means of representation, facilitated by the judgment function. For clear and effective expression, mechanical structures and control architecture technologies are required to manifest expressive actions. Additionally, behavior control technology is needed to generate expressive actions that can be naturally perceived by humans. Key research in expression technology includes the development of robots with high degrees of freedom and rich emotional expression in the face or head, expressive gestures using arms and the body, and conveying intentions and emotional expressions through Text-To-Speech (TTS) and sound. [6,7]

Moreover, there has been extensive recent research on large-scale multimodal model representation technology that integrates and synchronizes various devices and expressive content to enhance clarity and richness in expression. This involves altering emotional models in response to internal and external stimuli. External stimuli such as visual, tactile, auditory, temperature, and olfactory inputs, along with internal stimuli like hunger, self-preservation, and exploratory desires, affect the three axes of the emotional space (pleasantness, activation, certainty). This alteration influences emotions, allowing the expression of a total of seven emotions: Ekman's six basic emotions ('Happiness,' 'Anger,' 'Disgust,' 'Fear,' 'Sadness,' and 'Surprise') plus 'Neutral.' Research in this domain is grounded in human cognitive models to express human and social interactions. It explores the manifestation of internal motivations and demonstrates the ability to respond to the environment effectively and adaptively through natural emotional responses derived from environmental information. The research aims to develop cognitive systems for knowledge representation and knowledge extension, focusing on predicting situations through interaction with humans and addressing the novelty and uncertainty that arise in real environments. Self-understanding refers to representing the differences in beliefs and uncertainties that the robot possesses, while self-extension means expanding the knowledge the robot holds using experiences learned through action planning and execution. [6,7]
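The emotional-space idea above can be illustrated with a minimal sketch: a state on the three axes (pleasantness, activation, certainty) is shifted by a stimulus and then mapped to the nearest of the seven emotions. The prototype coordinates, the clamping to [-1, 1], and the function names are hypothetical placeholders chosen for illustration; the source does not specify them.

```python
import math

# Hypothetical prototype coordinates on the (pleasantness, activation, certainty)
# axes, each in [-1, 1]. These values are illustrative assumptions only.
EMOTION_PROTOTYPES = {
    "Neutral":   (0.0, 0.0, 0.0),
    "Happiness": (0.8, 0.5, 0.6),
    "Anger":     (-0.7, 0.8, 0.5),
    "Disgust":   (-0.6, 0.2, 0.4),
    "Fear":      (-0.7, 0.7, -0.7),
    "Sadness":   (-0.6, -0.6, -0.2),
    "Surprise":  (0.2, 0.8, -0.8),
}


def clamp(value, low=-1.0, high=1.0):
    """Keep a coordinate inside the assumed axis range."""
    return max(low, min(high, value))


def apply_stimulus(state, delta):
    """Shift the emotional state by a stimulus effect and clamp each axis."""
    return tuple(clamp(s + d) for s, d in zip(state, delta))


def nearest_emotion(state):
    """Return the prototype emotion closest to the state (Euclidean distance)."""
    return min(
        EMOTION_PROTOTYPES,
        key=lambda name: math.dist(state, EMOTION_PROTOTYPES[name]),
    )


# Example: a pleasant stimulus moves a neutral state toward the Happiness prototype.
state = apply_stimulus((0.0, 0.0, 0.0), (0.7, 0.4, 0.5))
print(nearest_emotion(state))
```

A nearest-prototype rule is only one possible way to read a discrete emotion off a continuous space; it is used here because it keeps the mapping easy to inspect.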

Haptic suits are a type of wearable device designed to provide users with tactile sensations, including pressure, texture, and temperature, enhancing the sense of touch. These suits establish direct contact with the user's skin to deliver tactile feedback, enriching interactions in virtual environments and creating a more immersive experience with virtual objects and surroundings. [11-13] The sense of touch engaged by haptic suits is considered a crucial non-verbal communication method in human interaction. Therefore, haptic suits are regarded not merely as information and communication technology but as a technology with social functions in human-computer or human-machine interaction. In particular, the social function of haptic suits can be understood through non-verbal social interactions in human society. [14] Various haptic suit products have been introduced to the market. Full-body suits envelop the entire body and utilize electrical stimulation to convey various tactile sensations. By applying a low current close to the skin, they stimulate muscles to create sensations of vibration and pressure. Additionally, the suits incorporate temperature sensation capabilities, allowing users to feel changes in temperature within the virtual environment. These features contribute to providing users with a more realistic and immersive virtual experience. Alternatively, wireless haptic vests can be worn on the upper body without covering the entire torso. Using haptic motors, they transmit vibrations, enabling users to feel various physical interactions such as impacts, explosions, and contacts occurring in the virtual environment. [6,7]

The concept of "presence" refers to a psychological phenomenon that often occurs when experiencing mediated virtual environments, defined as the "sense of being with another" or the "sense of being there." While the concept of presence itself is often simplistically defined as the "sense of being there," the theoretical establishment and measurement of presence through survey tools have posed numerous conceptual and operational definition challenges arising from this phrase. Specifically, presence is defined as the "perceptual illusion of non-mediation." According to this definition, two fundamental conditions must be met for the establishment of presence: 1) the use of human sensory organs, and 2) the existence of technology mediating between humans and the experience. In other words, for presence to occur, the following two conditions must be fulfilled: First, conscious efforts using senses such as sight, smell, taste, hearing, and touch are necessary, and during these efforts, there must be an illusion of virtual experiences being perceived as real. Second, there is always technology involved between humans and experiences, but in the process of feeling presence, the technology itself should be unnoticed, much like a person accustomed to wearing glasses who may not consciously perceive the glasses while wearing them. In particular, it provides a crucial psychological mechanism for explaining why users of virtual reality content, especially through avatars, feel a distinct experience when using the virtual embodiment's body directly, as opposed to experiencing it through an avatar. The key insight lies in the phenomenon where visual information perceived by the eyes takes precedence over proprioceptive information, which is the body's response to external stimuli applied to muscles or joints. 
In virtual reality, if the avatar and the user's perspective align, and the manipulation of virtual controllers closely corresponds to real-world actions, it implies that a high level of ownership sense and subjective perception of virtual embodiment can be achieved.

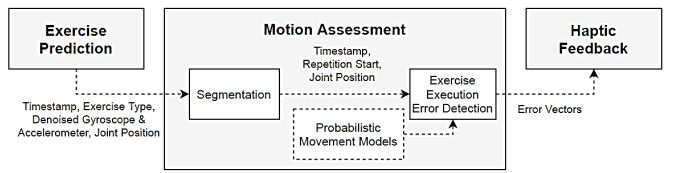

The fundamental approach for assessing movement involves the following steps: To evaluate motor skills during the execution of activities, the initial step is to identify the specific exercise the user is currently engaged in. Subsequently, the sensor data is segmented, and the initiation and conclusion of each repetition are determined. Segmentation is a crucial step for training probabilistic movement models that can recognize errors in exercise execution and subsequently deliver haptic feedback. The classification of exercises is based on the starting pose of each exercise. However, this approach is constrained by the variability in starting poses across the exercise pool and necessitates short breaks between repetitions. Alternatively, a training plan could be defined with predetermined exercises for users to follow, but this may be overly restrictive. Consequently, we opt for an approach where users can freely choose their exercises. For exercise classification, we employ support vector machines (SVM) to train models capable of distinguishing between exercise starting poses, repetitions, and movements unrelated to any exercise. [28]

Figure 2. General movement exercise prediction flowchart.
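The segmentation step described above can be sketched as follows, assuming a classifier (an SVM in the text, not implemented here) has already assigned each sensor frame one of three labels. The label strings `"start_pose"`, `"repetition"`, and `"other"` and the function name are hypothetical; the sketch only shows how repetition boundaries could be derived from such a label stream.

```python
def segment_repetitions(labels):
    """Return (start, end) frame index pairs, end exclusive, for each repetition.

    A repetition is a run of "repetition" frames that directly follows a run
    of "start_pose" frames. Runs of "repetition" frames that are not preceded
    by a starting pose are ignored.
    """
    segments = []
    seen_start_pose = False
    rep_start = None
    for i, label in enumerate(labels):
        if label == "repetition":
            # Open a new segment only if a starting pose was just observed.
            if seen_start_pose and rep_start is None:
                rep_start = i
        else:
            # Any non-repetition frame closes the currently open segment.
            if rep_start is not None:
                segments.append((rep_start, i))
                rep_start = None
            seen_start_pose = label == "start_pose"
    # Close a segment that runs to the end of the recording.
    if rep_start is not None:
        segments.append((rep_start, len(labels)))
    return segments


frame_labels = [
    "other", "start_pose", "start_pose", "repetition", "repetition",
    "repetition", "start_pose", "repetition", "repetition", "other",
    "repetition",
]
print(segment_repetitions(frame_labels))
```

Requiring a starting pose before each repetition mirrors the constraint noted in the text that short breaks between repetitions are needed.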

Once the exercise has been predicted and the repetitions have been segmented, we proceed to train probabilistic movement models for the detection of exercise execution errors. Probabilistic movement models, which essentially represent a distribution over trajectories, have demonstrated promising results in the field of robotics. They are well-suited for human exercise assessment, as they can learn specific trajectories or movements based on expert demonstrations. Leveraging the advantages of imitation learning, we construct probabilistic movement models for each body part, utilizing joint positions obtained from the reconstructed avatar. The haptic suit plugin already estimates the human pose and applies the movements to a standardized avatar, eliminating the need to account for the user's height and limb lengths. This allows for the comparison of motor skills between subjects, irrespective of their height. The flowchart for movement assessment is depicted in Figure 3. [28]

Figure 3. Operational movement assessment flowchart [28]

Figure 4. Probabilistic model of trajectories, from a feature space (left column) to trajectories (right column) (A) Generative model. Equally spaced (radial) basis functions are amplitude scaled by a feature vector to approximate a one-dimensional trajectory. (B) Learning the correlations between multi-dimensional input trajectories.
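The generative model in panel (A) can be sketched as a weighted sum of equally spaced Gaussian basis functions. This is a minimal sketch under stated assumptions: the basis functions are normalized to sum to one at every phase value, the phase runs from 0 to 1, and the width of 0.1 and the function names are illustrative choices not taken from the source.

```python
import math


def rbf_features(t, n_basis, width):
    """Normalized Gaussian basis activations at phase t in [0, 1]."""
    centers = [k / (n_basis - 1) for k in range(n_basis)]
    raw = [math.exp(-((t - c) ** 2) / (2.0 * width ** 2)) for c in centers]
    total = sum(raw)
    return [r / total for r in raw]


def trajectory(weights, n_steps, width=0.1):
    """One-dimensional trajectory as a weighted sum of basis functions.

    `weights` plays the role of the feature vector that scales each basis
    function; it needs at least two entries, and n_steps must be at least 2.
    """
    n_basis = len(weights)
    points = []
    for step in range(n_steps):
        t = step / (n_steps - 1)
        phi = rbf_features(t, n_basis, width)
        points.append(sum(w * p for w, p in zip(weights, phi)))
    return points


# Example: five basis functions produce a smooth curve from a short feature vector.
print([round(y, 3) for y in trajectory([0.0, 1.0, 0.0, 1.0, 0.0], 11)])
```

In a full probabilistic movement model the feature vector would be treated as a random variable with a mean and covariance learned from demonstrations; the sketch shows only the deterministic mapping from features to a trajectory.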

The concept of imitation learning facilitates the real-time detection of exercise execution errors without the need to predefine specific error classes. This means that we identify execution errors by comparing users' observed motor skills with a probabilistic model derived from expert demonstrations. Unlike classification methods, which offer limited information on specific execution errors and require a priori knowledge of possible error classes, we opt to train probabilistic movement models for each Inertial Measurement Unit (IMU) or joint. Probabilistic models also empower us to identify subpar exercise performance as soon as the joint position exceeds the standard deviation of the reference execution. This approach, in particular, allows us to assess the severity of the execution error, enabling the provision of stronger haptic feedback for more significant errors. Additionally, Functional Electrical Stimulation involves applying electrical current pulses to excitable tissue to induce artificial contractions, enhancing or replacing motor functions in individuals with neurological impairments.
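The error-detection idea in this paragraph (flagging a joint as soon as it leaves the standard deviation of the reference execution, and scaling haptic feedback with severity) can be sketched as follows. It is a simplification that treats each time step as an independent Gaussian fitted to time-aligned expert demonstrations; the linear mapping to an intensity in [0, 1], the `max_sigma` cut-off, and the function names are assumptions made for illustration.

```python
import statistics


def fit_reference(demonstrations):
    """Per-time-step mean and standard deviation over expert demonstrations.

    `demonstrations` is a list of equally long, time-aligned joint-position
    sequences; at least two demonstrations are required.
    """
    means, stds = [], []
    for samples in zip(*demonstrations):
        means.append(statistics.mean(samples))
        stds.append(statistics.stdev(samples))
    return means, stds


def feedback_intensity(observed, means, stds, max_sigma=3.0):
    """Map each deviation to a haptic intensity in [0, 1].

    The intensity is 0 within one standard deviation of the reference and
    grows linearly to 1 at `max_sigma` standard deviations.
    """
    intensities = []
    for x, mu, sd in zip(observed, means, stds):
        if sd > 0:
            z = abs(x - mu) / sd
        else:
            z = 0.0 if x == mu else float("inf")
        if z <= 1.0:
            intensities.append(0.0)
        else:
            intensities.append(min(1.0, (z - 1.0) / (max_sigma - 1.0)))
    return intensities


expert_demos = [[0.0, 1.0, 2.0], [0.2, 1.2, 2.2], [-0.2, 0.8, 1.8]]
means, stds = fit_reference(expert_demos)
print(feedback_intensity([0.1, 1.4, 2.6], means, stds))
```

Because the intensity depends only on the distance from the reference, no error classes need to be defined in advance, which matches the motivation given in the text.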

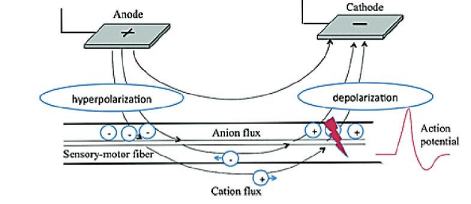

Working principle: The stimulation process involves applying electrical current through a pair of electrodes positioned on the skin above sensory-motor structures. The electric field created between the two electrodes (anode and cathode) induces an ion flux in the tissue (Figure 5). Specifically, the anode, functioning as the positive electrode, imparts a positive charge to the cell membranes of neighboring neurons, resulting in an accumulation of negative ions and subsequent membrane hyperpolarization. In contrast, the cathode, serving as the negative electrode, attracts positive ions, leading to depolarization in the underlying membrane region. If the depolarization reaches a critical threshold, an action potential is generated, indistinguishable from a physiological one. This generated action potential propagates to the neuromuscular junction, causing muscle fibers to contract. It is essential to note that the charge threshold required for generating action potentials in muscle fibers is significantly higher than that for neurons. As a result, electrical stimulation predominantly activates nerves rather than muscles, underscoring the importance of intact lower motor neurons for the effectiveness of Functional Electrical Stimulation (FES) [18,28].

Figure 5 Neurophysiological principles of FES

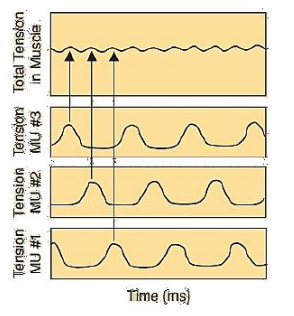

Muscle recruitment: During a contraction induced by Functional Electrical Stimulation (FES), muscle fibers are recruited in a manner distinct from physiological contractions. Primarily, motor units are recruited based on geometrical activation, starting from superficial layers at low current levels and progressing to deeper layers with increasing current amplitude [19]. Additionally, while voluntary movements involve sequential activation of motor units (asynchronous recruitment), FES recruits all motor units simultaneously (synchronous recruitment) [20,28]. In asynchronous recruitment, illustrated in Figure 6, motor units collaborate to maintain constant tension during muscle contraction (tetanic contraction), with adjacent units activated at a frequency of 6-8 Hz. This asynchronous recruitment helps mitigate the onset of muscle fatigue. Conversely, synchronous recruitment in FES requires much higher stimulation frequencies, in the range of 20-40 Hz, leading to an elevated rate of muscle fatigue associated with FES approaches [19]. Furthermore, FES follows a non-physiological recruitment pattern by activating fast-twitch fibers before slow-twitch ones. This occurs because the larger-diameter axons innervating fast-twitch fibers are more influenced by the stimulation-induced electric field than the smaller-diameter ones of slow-twitch fibers. The wider spacing between the nodes of Ranvier in these larger axons results in larger induced transmembrane voltage changes at the same charge level [18]. However, fast-twitch fibers tend to fatigue more quickly, contributing to the increased fatigue rate characteristic of FES-induced muscle contractions [19,28].

Figure 6 Summation of tension in motor units (MU) during asynchronous recruitment.

Pulse Shapes: The generation of the ionic flux is induced by the variation in the electric field. A relatively rapid rising edge is crucial for the current to induce excitation, necessitating a properly shaped wave or pulse [21]. Figure 7 illustrates some of the most common pulse waves used in Functional Electrical Stimulation (FES). These waveforms are categorized as monophasic and biphasic. Monophasic waveforms involve repeated unidirectional pulses, typically cathodic, while biphasic waveforms consist of repeated pulses with a cathodic phase followed by an anodic one [22,28]. In biphasic configurations, the positive pulse counterbalances the negative one, resulting in a net injected charge equal to zero, preventing potential damage at the electrode-tissue interface [22,28]. Within biphasic configurations, various pulse shapes exist, including symmetric, asymmetric, and balanced asymmetric. Symmetric pulses consist of two identical phases in terms of duration and amplitude but with opposite polarities. Conversely, asymmetric pulses involve phases with different durations and/or amplitudes [23]. Balanced asymmetric shapes are characterized by selecting parameters such that the total energy delivered to the body during the leading phase equals the total energy removed from the body during the trailing pulse, despite differing amplitude and duration [23,28].

Figure 7 Examples of pulse shapes commonly used for functional electrical stimulation.
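The zero-net-charge property of biphasic pulses described above can be checked with a short sketch. It assumes idealized rectangular phases with no inter-phase gap, a cathodic (negative) leading phase, and phase widths that are whole multiples of the sampling step. It balances charge (amplitude times duration), in line with the statement that the net injected charge is zero; the function names and sample values are illustrative.

```python
def balanced_trailing_amplitude(lead_amplitude_ma, lead_width_us, trail_width_us):
    """Amplitude (mA) of the trailing phase that cancels the leading-phase charge."""
    return lead_amplitude_ma * lead_width_us / trail_width_us


def biphasic_pulse(lead_amplitude_ma, lead_width_us, trail_width_us, step_us=10):
    """Sampled rectangular biphasic pulse: cathodic (negative), then anodic."""
    trail_amplitude_ma = balanced_trailing_amplitude(
        lead_amplitude_ma, lead_width_us, trail_width_us
    )
    lead = [-lead_amplitude_ma] * (lead_width_us // step_us)
    trail = [trail_amplitude_ma] * (trail_width_us // step_us)
    return lead + trail


def net_charge_nc(samples_ma, step_us=10):
    """Net charge in nanocoulombs (1 mA * 1 us = 1 nC)."""
    return sum(samples_ma) * step_us


# Balanced asymmetric example: 40 mA for 200 us, then 10 mA for 800 us.
pulse = biphasic_pulse(40, 200, 800)
print(balanced_trailing_amplitude(40, 200, 800), net_charge_nc(pulse))
```

A symmetric pulse is the special case in which both widths are equal, so the trailing amplitude equals the leading one.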

Stimulation Parameters: FES pulses are characterized by three parameters, as illustrated in Figure 8: pulse amplitude, pulse duration (or pulse width, PW), and pulse frequency [23]. Pulse amplitude represents the magnitude of the stimulation and directly influences the specific type of nerve fibers responding to it. As mentioned earlier, larger nerve fibers in close proximity to the stimulation electrode are recruited first [23]. Pulse duration, or pulse width, refers to the time duration of a single phase of the pulse. The strength of a pulse is determined by its charge level, defined as the product of pulse amplitude and duration. Therefore, the required duration for an effective pulse varies inversely with amplitude; in other words, to generate the same induced response, an increase in pulse duration requires a lower amplitude, and vice versa [26]. Each pulse with a proper charge level, inducing an action potential, produces a muscle twitch characterized by a sharp rise in force followed by a slower return to the relaxed state. Stimulation frequency is the rate at which stimulation pulses are delivered. Increasing the frequency leads to temporal summation of twitches, resulting in a higher mean generated force compared to that of single twitches [23]. Once the pulse frequency surpasses a certain value (typically 20 Hz), a sustained contraction (tetanic contraction) is achieved, where individual twitches are no longer distinguishable. Tetanic contraction, achieved with frequency values ranging from 20 to 50 Hz, is desirable in FES applications to provide high-quality movement. However, pulse frequency should not be excessively increased, as doing so accelerates the onset of muscle fatigue. Thus, there is a trade-off in choosing this parameter [23]. Typical working values include a frequency between 20 and 50 Hz, pulse width between 100 and 500 μs, and amplitude between 10 and 125 mA [22,28].

Figure 8: Functional electrical stimulation parameters.
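The relationship between amplitude, pulse width, and charge described above can be worked through in a few lines. The sketch uses the simplified rule from the text (charge per phase equals amplitude times duration) and the typical working ranges quoted there; the function and dictionary names are illustrative, and the range check is not a safety limit.

```python
# Typical working ranges quoted in the text (not safety limits).
TYPICAL_RANGES = {
    "frequency_hz": (20, 50),
    "pulse_width_us": (100, 500),
    "amplitude_ma": (10, 125),
}


def pulse_charge_uc(amplitude_ma, pulse_width_us):
    """Charge per phase in microcoulombs (mA * us = nC, divided by 1000)."""
    return amplitude_ma * pulse_width_us / 1000.0


def amplitude_for_charge(charge_uc, pulse_width_us):
    """Amplitude (mA) needed to deliver a given charge at a given pulse width."""
    return charge_uc * 1000.0 / pulse_width_us


def out_of_range(params):
    """Names of parameters that fall outside the typical working ranges."""
    return [
        name
        for name, (low, high) in TYPICAL_RANGES.items()
        if not low <= params[name] <= high
    ]


# Doubling the pulse width halves the amplitude needed for the same charge.
charge = pulse_charge_uc(40, 250)
print(charge, amplitude_for_charge(charge, 500))
print(out_of_range({"frequency_hz": 80, "pulse_width_us": 300, "amplitude_ma": 5}))
```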

Stimulator Circuit: Electrical stimulators can deliver pulses controlled by either voltage or current. Voltage-controlled stimulators maintain a constant desired voltage between electrodes, without considering variations in tissue resistance. When inadequate skin-electrode contact increases the resistance, the delivered current decreases, leading to a reduced muscle response. However, these stimulators do not pose a potential harm to the skin. On the other hand, current-controlled stimulators provide constant current pulses. When the effective electrode surface area is reduced, the voltage level rises to maintain the current and the current density increases. This elevation in current density could potentially lead to skin burns. [24]

Motion capture module: The motion capture module utilizes Inertial Measurement Unit (IMU) sensors, which are integrated into the suit and fixed in place (as illustrated in Figure 9). These sensors track, record, and monitor the movements and positioning of the users, generating a digital representation of the user in the form of an avatar. This technology finds applications in various fields such as animation creation (e.g., games, movies), performance monitoring and capture (e.g., sports), as well as ergonomics and human factor testing for research and data analysis. [22,23,28]

Electrical stimulation module: The electrical stimulation module incorporates dry textile voltage-controlled electrodes, strategically embedded in the suit at anatomical locations, as depicted in Figure 9. These electrodes are paired into channels, with each channel corresponding to a specific muscle. Each channel comprises both an anode and a cathode, with some channels sharing the same anode. The electrodes can deliver Neuromuscular Electrical Stimulation pulses, inducing artificial muscle contractions, and Transcutaneous Electrical Nerve Stimulation to replicate haptic sensations. Through this system, the haptic suit can provide physical feedback aligned with the visual simulation experienced in a virtual reality environment. [22,23,28]

Figure 9 Smart Haptic Feedback Suit [28]

Biometry module: The biometry module incorporates photoplethysmography (PPG) technology, offering data on the user's heart rate in beats per minute (BPM) and pulse rate variability (PRV). This functionality facilitates the development of interactive virtual reality training content that dynamically adjusts to the participant, providing personalized and tailored experiences. [22,23,28]
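As a minimal sketch of how the two biometric quantities above could be derived, the following assumes that pulse peaks have already been detected in the PPG signal and that the inter-beat intervals are available in milliseconds. The source does not state which variability index the suit reports; RMSSD is used here as one common choice, and the function names are illustrative.

```python
import math


def heart_rate_bpm(intervals_ms):
    """Mean heart rate from inter-beat intervals given in milliseconds."""
    mean_interval = sum(intervals_ms) / len(intervals_ms)
    return 60000.0 / mean_interval


def rmssd_ms(intervals_ms):
    """Root mean square of successive interval differences, in milliseconds.

    Requires at least two intervals.
    """
    diffs = [b - a for a, b in zip(intervals_ms, intervals_ms[1:])]
    return math.sqrt(sum(d * d for d in diffs) / len(diffs))


# Example with alternating 800 ms and 850 ms intervals.
beats = [800, 850, 800, 850]
print(round(heart_rate_bpm(beats), 1), rmssd_ms(beats))
```

Values such as these could then drive the adaptive training content mentioned in the text, for example by easing an exercise when the heart rate rises above a chosen threshold.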

Benefits & Limitations: FES-based treatment presents several advantages in the rehabilitation process. It enables active muscle contractions, contributes to muscle strength improvement, prevents disuse and muscle atrophy, reduces spasticity and spasms, enhances the energy-efficient use of proximal limb muscles, and reduces energy expenditure associated with post-stroke activities [25]. Despite its peripheral application, studies have shown that FES can induce neurological changes and potentially aid in motor relearning when combined with residual voluntary inputs from the patient. This phenomenon, known as the "carry-over effect," is explained by Rushton's hypothesis, highlighting FES's unique feature of activating nerve fibers both orthodromically and antidromically, unlike physiological activation that exclusively operates orthodromically. [22,26,28]

However, FES has some limitations, including the non-linear relationship between injected current and induced muscle contraction, and the early onset of fatigue in stimulated muscles. This is attributed to the synchronous and inverted recruitment of motor units compared to physiological activation, restricting the long-term applicability of FES [27,28].

The judgment and expression technology for Human-Robot Interaction (HRI) is a crucial technology that endows robots with vitality by enabling them to assess interaction situations and user intentions. This is achieved through discerning appropriate responses and actions, facilitating communication and collaboration with humans. This technology is essential for the commercialization of service robots, outlining the development direction of HRI. Initially, research in HRI technology focused on individual functional units based on foundational technologies, aiming to improve performance in specific functionalities (such as face recognition, speaker recognition, gesture recognition, sound source tracking, and human tracking). However, these technologies were limited to well-structured environments, with low usability due to insufficient consideration of consumer demands in the immature market. As demand for HRI technology in real-world applications has increased across various fields, the research direction has shifted toward development focused on real-world applications.

In the future, it is anticipated that the development of recognition technology will transition from unit-function-based continuous monitoring to recognition technology integrating environmental sensors and distributed resources. Following recognition, there will be an organic integration of perception, judgment, and expression based on service scenarios to provide services. Moreover, HRI technology is expected to evolve into an open and market-oriented form, where market participants and technology providers share information and knowledge. This will enhance responsiveness to actual services through efficient utilization of computing resources. Robot services will take on a form where knowledge and resources are shared, reused, and virtualized, similar to the web. In this manner, robots and avatars can interact in virtual spaces, providing a core technology applicable across various fields such as education, healthcare, entertainment, and social safety, where humans and spaces are shared.

Figure 10: Development direction of HRI technology

This research was supported by the Technology Innovation Program (20019115) funded by the Korea Planning & Evaluation Institute of Industrial Technology (KEIT) and the Ministry of Trade, Industry, & Energy (MOTIE, Korea).


Dear Hao Jiang, to Journal of Nutrition and Food Processing We greatly appreciate the efficient, professional and rapid processing of our paper by your team. If there is anything else we should do, please do not hesitate to let us know. On behalf of my co-authors, we would like to express our great appreciation to editor and reviewers.

As an author who has recently published in the journal "Brain and Neurological Disorders". I am delighted to provide a testimonial on the peer review process, editorial office support, and the overall quality of the journal. The peer review process at Brain and Neurological Disorders is rigorous and meticulous, ensuring that only high-quality, evidence-based research is published. The reviewers are experts in their fields, and their comments and suggestions were constructive and helped improve the quality of my manuscript. The review process was timely and efficient, with clear communication from the editorial office at each stage. The support from the editorial office was exceptional throughout the entire process. The editorial staff was responsive, professional, and always willing to help. They provided valuable guidance on formatting, structure, and ethical considerations, making the submission process seamless. Moreover, they kept me informed about the status of my manuscript and provided timely updates, which made the process less stressful. The journal Brain and Neurological Disorders is of the highest quality, with a strong focus on publishing cutting-edge research in the field of neurology. The articles published in this journal are well-researched, rigorously peer-reviewed, and written by experts in the field. The journal maintains high standards, ensuring that readers are provided with the most up-to-date and reliable information on brain and neurological disorders. In conclusion, I had a wonderful experience publishing in Brain and Neurological Disorders. The peer review process was thorough, the editorial office provided exceptional support, and the journal's quality is second to none. I would highly recommend this journal to any researcher working in the field of neurology and brain disorders.

Dear Agrippa Hilda, Journal of Neuroscience and Neurological Surgery, Editorial Coordinator, I trust this message finds you well. I want to extend my appreciation for considering my article for publication in your esteemed journal. I am pleased to provide a testimonial regarding the peer review process and the support received from your editorial office. The peer review process for my paper was carried out in a highly professional and thorough manner. The feedback and comments provided by the authors were constructive and very useful in improving the quality of the manuscript. This rigorous assessment process undoubtedly contributes to the high standards maintained by your journal.

International Journal of Clinical Case Reports and Reviews. I strongly recommend to consider submitting your work to this high-quality journal. The support and availability of the Editorial staff is outstanding and the review process was both efficient and rigorous.

Thank you very much for publishing my Research Article titled “Comparing Treatment Outcome Of Allergic Rhinitis Patients After Using Fluticasone Nasal Spray And Nasal Douching" in the Journal of Clinical Otorhinolaryngology. As Medical Professionals we are immensely benefited from study of various informative Articles and Papers published in this high quality Journal. I look forward to enriching my knowledge by regular study of the Journal and contribute my future work in the field of ENT through the Journal for use by the medical fraternity. The support from the Editorial office was excellent and very prompt. I also welcome the comments received from the readers of my Research Article.

Dear Erica Kelsey, Editorial Coordinator of Cancer Research and Cellular Therapeutics Our team is very satisfied with the processing of our paper by your journal. That was fast, efficient, rigorous, but without unnecessary complications. We appreciated the very short time between the submission of the paper and its publication on line on your site.

I am very glad to say that the peer review process is very successful and fast and support from the Editorial Office. Therefore, I would like to continue our scientific relationship for a long time. And I especially thank you for your kindly attention towards my article. Have a good day!

"We recently published an article entitled “Influence of beta-Cyclodextrins upon the Degradation of Carbofuran Derivatives under Alkaline Conditions" in the Journal of “Pesticides and Biofertilizers” to show that the cyclodextrins protect the carbamates increasing their half-life time in the presence of basic conditions This will be very helpful to understand carbofuran behaviour in the analytical, agro-environmental and food areas. We greatly appreciated the interaction with the editor and the editorial team; we were particularly well accompanied during the course of the revision process, since all various steps towards publication were short and without delay".

I would like to express my gratitude towards you process of article review and submission. I found this to be very fair and expedient. Your follow up has been excellent. I have many publications in national and international journal and your process has been one of the best so far. Keep up the great work.

We are grateful for this opportunity to provide a glowing recommendation to the Journal of Psychiatry and Psychotherapy. We found that the editorial team were very supportive, helpful, kept us abreast of timelines and over all very professional in nature. The peer review process was rigorous, efficient and constructive that really enhanced our article submission. The experience with this journal remains one of our best ever and we look forward to providing future submissions in the near future.

I am very pleased to serve as EBM of the journal, I hope many years of my experience in stem cells can help the journal from one way or another. As we know, stem cells hold great potential for regenerative medicine, which are mostly used to promote the repair response of diseased, dysfunctional or injured tissue using stem cells or their derivatives. I think Stem Cell Research and Therapeutics International is a great platform to publish and share the understanding towards the biology and translational or clinical application of stem cells.

I would like to give my testimony in the support I have got by the peer review process and to support the editorial office where they were of asset to support young author like me to be encouraged to publish their work in your respected journal and globalize and share knowledge across the globe. I really give my great gratitude to your journal and the peer review including the editorial office.

I am delighted to publish our manuscript entitled "A Perspective on Cocaine Induced Stroke - Its Mechanisms and Management" in the Journal of Neuroscience and Neurological Surgery. The peer review process, support from the editorial office, and quality of the journal are excellent. The manuscripts published are of high quality and of excellent scientific value. I recommend this journal very much to colleagues.

Dr.Tania Muñoz, My experience as researcher and author of a review article in The Journal Clinical Cardiology and Interventions has been very enriching and stimulating. The editorial team is excellent, performs its work with absolute responsibility and delivery. They are proactive, dynamic and receptive to all proposals. Supporting at all times the vast universe of authors who choose them as an option for publication. The team of review specialists, members of the editorial board, are brilliant professionals, with remarkable performance in medical research and scientific methodology. Together they form a frontline team that consolidates the JCCI as a magnificent option for the publication and review of high-level medical articles and broad collective interest. I am honored to be able to share my review article and open to receive all your comments.

“The peer review process of JPMHC is quick and effective. Authors are benefited by good and professional reviewers with huge experience in the field of psychology and mental health. The support from the editorial office is very professional. People to contact to are friendly and happy to help and assist any query authors might have. Quality of the Journal is scientific and publishes ground-breaking research on mental health that is useful for other professionals in the field”.

Dear editorial department: On behalf of our team, I hereby certify the reliability and superiority of the International Journal of Clinical Case Reports and Reviews in the peer review process, editorial support, and journal quality. Firstly, the peer review process of the International Journal of Clinical Case Reports and Reviews is rigorous, fair, transparent, fast, and of high quality. The editorial department invites experts from relevant fields as anonymous reviewers to review all submitted manuscripts. These experts have rich academic backgrounds and experience, and can accurately evaluate the academic quality, originality, and suitability of manuscripts. The editorial department is committed to ensuring the rigor of the peer review process, while also making every effort to ensure a fast review cycle to meet the needs of authors and the academic community. Secondly, the editorial team of the International Journal of Clinical Case Reports and Reviews is composed of a group of senior scholars and professionals with rich experience and professional knowledge in related fields. The editorial department is committed to assisting authors in improving their manuscripts, ensuring their academic accuracy, clarity, and completeness. Editors actively collaborate with authors, providing useful suggestions and feedback to promote the improvement and development of the manuscript. We believe that the support of the editorial department is one of the key factors in ensuring the quality of the journal. Finally, the International Journal of Clinical Case Reports and Reviews is renowned for its high- quality articles and strict academic standards. The editorial department is committed to publishing innovative and academically valuable research results to promote the development and progress of related fields. 
The International Journal of Clinical Case Reports and Reviews is reasonably priced and ensures excellent service and quality ratio, allowing authors to obtain high-level academic publishing opportunities in an affordable manner. I hereby solemnly declare that the International Journal of Clinical Case Reports and Reviews has a high level of credibility and superiority in terms of peer review process, editorial support, reasonable fees, and journal quality. Sincerely, Rui Tao.

Clinical Cardiology and Cardiovascular Interventions I testity the covering of the peer review process, support from the editorial office, and quality of the journal.

Clinical Cardiology and Cardiovascular Interventions, we deeply appreciate the interest shown in our work and its publication. It has been a true pleasure to collaborate with you. The peer review process, as well as the support provided by the editorial office, have been exceptional, and the quality of the journal is very high, which was a determining factor in our decision to publish with you.

The peer reviewers process is quick and effective, the supports from editorial office is excellent, the quality of journal is high. I would like to collabroate with Internatioanl journal of Clinical Case Reports and Reviews journal clinically in the future time.

Clinical Cardiology and Cardiovascular Interventions, I would like to express my sincerest gratitude for the trust placed in our team for the publication in your journal. It has been a true pleasure to collaborate with you on this project. I am pleased to inform you that both the peer review process and the attention from the editorial coordination have been excellent. Your team has worked with dedication and professionalism to ensure that your publication meets the highest standards of quality. We are confident that this collaboration will result in mutual success, and we are eager to see the fruits of this shared effort.

Dear Dr. Jessica Magne, Editorial Coordinator 0f Clinical Cardiology and Cardiovascular Interventions, I hope this message finds you well. I want to express my utmost gratitude for your excellent work and for the dedication and speed in the publication process of my article titled "Navigating Innovation: Qualitative Insights on Using Technology for Health Education in Acute Coronary Syndrome Patients." I am very satisfied with the peer review process, the support from the editorial office, and the quality of the journal. I hope we can maintain our scientific relationship in the long term.

Dear Monica Gissare, - Editorial Coordinator of Nutrition and Food Processing. ¨My testimony with you is truly professional, with a positive response regarding the follow-up of the article and its review, you took into account my qualities and the importance of the topic¨.

Dear Dr. Jessica Magne, Editorial Coordinator 0f Clinical Cardiology and Cardiovascular Interventions, The review process for the article “The Handling of Anti-aggregants and Anticoagulants in the Oncologic Heart Patient Submitted to Surgery” was extremely rigorous and detailed. From the initial submission to the final acceptance, the editorial team at the “Journal of Clinical Cardiology and Cardiovascular Interventions” demonstrated a high level of professionalism and dedication. The reviewers provided constructive and detailed feedback, which was essential for improving the quality of our work. Communication was always clear and efficient, ensuring that all our questions were promptly addressed. The quality of the “Journal of Clinical Cardiology and Cardiovascular Interventions” is undeniable. It is a peer-reviewed, open-access publication dedicated exclusively to disseminating high-quality research in the field of clinical cardiology and cardiovascular interventions. The journal's impact factor is currently under evaluation, and it is indexed in reputable databases, which further reinforces its credibility and relevance in the scientific field. I highly recommend this journal to researchers looking for a reputable platform to publish their studies.

Dear Editorial Coordinator of the Journal of Nutrition and Food Processing! "I would like to thank the Journal of Nutrition and Food Processing for including and publishing my article. The peer review process was very quick, movement and precise. The Editorial Board has done an extremely conscientious job with much help, valuable comments and advices. I find the journal very valuable from a professional point of view, thank you very much for allowing me to be part of it and I would like to participate in the future!”

Dealing with The Journal of Neurology and Neurological Surgery was very smooth and comprehensive. The office staff took time to address my needs and the response from editors and the office was prompt and fair. I certainly hope to publish with this journal again.Their professionalism is apparent and more than satisfactory. Susan Weiner

My Testimonial Covering as fellowing: Lin-Show Chin. The peer reviewers process is quick and effective, the supports from editorial office is excellent, the quality of journal is high. I would like to collabroate with Internatioanl journal of Clinical Case Reports and Reviews.

My experience publishing in Psychology and Mental Health Care was exceptional. The peer review process was rigorous and constructive, with reviewers providing valuable insights that helped enhance the quality of our work. The editorial team was highly supportive and responsive, making the submission process smooth and efficient. The journal's commitment to high standards and academic rigor makes it a respected platform for quality research. I am grateful for the opportunity to publish in such a reputable journal.

My experience publishing in International Journal of Clinical Case Reports and Reviews was exceptional. I Come forth to Provide a Testimonial Covering the Peer Review Process and the editorial office for the Professional and Impartial Evaluation of the Manuscript.

I would like to offer my testimony in the support. I have received through the peer review process and support the editorial office where they are to support young authors like me, encourage them to publish their work in your esteemed journals, and globalize and share knowledge globally. I really appreciate your journal, peer review, and editorial office.

Dear Agrippa Hilda- Editorial Coordinator of Journal of Neuroscience and Neurological Surgery, "The peer review process was very quick and of high quality, which can also be seen in the articles in the journal. The collaboration with the editorial office was very good."

I would like to express my sincere gratitude for the support and efficiency provided by the editorial office throughout the publication process of my article, “Delayed Vulvar Metastases from Rectal Carcinoma: A Case Report.” I greatly appreciate the assistance and guidance I received from your team, which made the entire process smooth and efficient. The peer review process was thorough and constructive, contributing to the overall quality of the final article. I am very grateful for the high level of professionalism and commitment shown by the editorial staff, and I look forward to maintaining a long-term collaboration with the International Journal of Clinical Case Reports and Reviews.

To Dear Erin Aust, I would like to express my heartfelt appreciation for the opportunity to have my work published in this esteemed journal. The entire publication process was smooth and well-organized, and I am extremely satisfied with the final result. The Editorial Team demonstrated the utmost professionalism, providing prompt and insightful feedback throughout the review process. Their clear communication and constructive suggestions were invaluable in enhancing my manuscript, and their meticulous attention to detail and dedication to quality are truly commendable. Additionally, the support from the Editorial Office was exceptional. From the initial submission to the final publication, I was guided through every step of the process with great care and professionalism. The team's responsiveness and assistance made the entire experience both easy and stress-free. I am also deeply impressed by the quality and reputation of the journal. It is an honor to have my research featured in such a respected publication, and I am confident that it will make a meaningful contribution to the field.

"I am grateful for the opportunity of contributing to [International Journal of Clinical Case Reports and Reviews] and for the rigorous review process that enhances the quality of research published in your esteemed journal. I sincerely appreciate the time and effort of your team who have dedicatedly helped me in improvising changes and modifying my manuscript. The insightful comments and constructive feedback provided have been invaluable in refining and strengthening my work".

I thank the ‘Journal of Clinical Research and Reports’ for accepting this article for publication. This is a rigorously peer reviewed journal which is on all major global scientific data bases. I note the review process was prompt, thorough and professionally critical. It gave us an insight into a number of important scientific/statistical issues. The review prompted us to review the relevant literature again and look at the limitations of the study. The peer reviewers were open, clear in the instructions and the editorial team was very prompt in their communication. This journal certainly publishes quality research articles. I would recommend the journal for any future publications.

Dear Jessica Magne, with gratitude for the joint work. Fast process of receiving and processing the submitted scientific materials in “Clinical Cardiology and Cardiovascular Interventions”. High level of competence of the editors with clear and correct recommendations and ideas for enriching the article.

We found the peer review process quick and positive in its input. The support from the editorial officer has been very agile, always with the intention of improving the article and taking into account our subsequent corrections.

My article, titled 'No Way Out of the Smartphone Epidemic Without Considering the Insights of Brain Research,' has been republished in the International Journal of Clinical Case Reports and Reviews. The review process was seamless and professional, with the editors being both friendly and supportive. I am deeply grateful for their efforts.

To Dear Erin Aust – Editorial Coordinator of Journal of General Medicine and Clinical Practice! I declare that I am absolutely satisfied with your work carried out with great competence in following the manuscript during the various stages from its receipt, during the revision process to the final acceptance for publication. Thank Prof. Elvira Farina

Dear Jessica, and the super professional team of the ‘Clinical Cardiology and Cardiovascular Interventions’ I am sincerely grateful to the coordinated work of the journal team for the no problem with the submission of my manuscript: “Cardiometabolic Disorders in A Pregnant Woman with Severe Preeclampsia on the Background of Morbid Obesity (Case Report).” The review process by 5 experts was fast, and the comments were professional, which made it more specific and academic, and the process of publication and presentation of the article was excellent. I recommend that my colleagues publish articles in this journal, and I am interested in further scientific cooperation. Sincerely and best wishes, Dr. Oleg Golyanovskiy.

Dear Ashley Rosa, Editorial Coordinator of the journal - Psychology and Mental Health Care. " The process of obtaining publication of my article in the Psychology and Mental Health Journal was positive in all areas. The peer review process resulted in a number of valuable comments, the editorial process was collaborative and timely, and the quality of this journal has been quickly noticed, resulting in alternative journals contacting me to publish with them." Warm regards, Susan Anne Smith, PhD. Australian Breastfeeding Association.

Dear Jessica Magne, Editorial Coordinator, Clinical Cardiology and Cardiovascular Interventions, Auctores Publishing LLC. I appreciate the journal (JCCI) editorial office support, the entire team leads were always ready to help, not only on technical front but also on thorough process. Also, I should thank dear reviewers’ attention to detail and creative approach to teach me and bring new insights by their comments. Surely, more discussions and introduction of other hemodynamic devices would provide better prevention and management of shock states. Your efforts and dedication in presenting educational materials in this journal are commendable. Best wishes from, Farahnaz Fallahian.

Dear Maria Emerson, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews, Auctores Publishing LLC. I am delighted to have published our manuscript, "Acute Colonic Pseudo-Obstruction (ACPO): A rare but serious complication following caesarean section." I want to thank the editorial team, especially Maria Emerson, for their prompt review of the manuscript, quick responses to queries, and overall support. Yours sincerely Dr. Victor Olagundoye.

Dear Ashley Rosa, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews. Many thanks for publishing this manuscript after I lost confidence the editors were most helpful, more than other journals Best wishes from, Susan Anne Smith, PhD. Australian Breastfeeding Association.

Dear Agrippa Hilda, Editorial Coordinator, Journal of Neuroscience and Neurological Surgery. The entire process including article submission, review, revision, and publication was extremely easy. The journal editor was prompt and helpful, and the reviewers contributed to the quality of the paper. Thank you so much! Eric Nussbaum, MD

Dr Hala Al Shaikh This is to acknowledge that the peer review process for the article ’ A Novel Gnrh1 Gene Mutation in Four Omani Male Siblings, Presentation and Management ’ sent to the International Journal of Clinical Case Reports and Reviews was quick and smooth. The editorial office was prompt with easy communication.

Dear Erin Aust, Editorial Coordinator, Journal of General Medicine and Clinical Practice. We are pleased to share our experience with the “Journal of General Medicine and Clinical Practice”, following the successful publication of our article. The peer review process was thorough and constructive, helping to improve the clarity and quality of the manuscript. We are especially thankful to Ms. Erin Aust, the Editorial Coordinator, for her prompt communication and continuous support throughout the process. Her professionalism ensured a smooth and efficient publication experience. The journal upholds high editorial standards, and we highly recommend it to fellow researchers seeking a credible platform for their work. Best wishes By, Dr. Rakhi Mishra.

Dear Jessica Magne, Editorial Coordinator, Clinical Cardiology and Cardiovascular Interventions, Auctores Publishing LLC. The peer review process of the journal of Clinical Cardiology and Cardiovascular Interventions was excellent and fast, as was the support of the editorial office and the quality of the journal. Kind regards Walter F. Riesen Prof. Dr. Dr. h.c. Walter F. Riesen.

Dear Ashley Rosa, Editorial Coordinator, International Journal of Clinical Case Reports and Reviews, Auctores Publishing LLC. Thank you for publishing our article, Exploring Clozapine's Efficacy in Managing Aggression: A Multiple Single-Case Study in Forensic Psychiatry in the international journal of clinical case reports and reviews. We found the peer review process very professional and efficient. The comments were constructive, and the whole process was efficient. On behalf of the co-authors, I would like to thank you for publishing this article. With regards, Dr. Jelle R. Lettinga.

Dear Clarissa Eric, Editorial Coordinator, Journal of Clinical Case Reports and Studies, I would like to express my deep admiration for the exceptional professionalism demonstrated by your journal. I am thoroughly impressed by the speed of the editorial process, the substantive and insightful reviews, and the meticulous preparation of the manuscript for publication. Additionally, I greatly appreciate the courteous and immediate responses from your editorial office to all my inquiries. Best Regards, Dariusz Ziora

Dear Chrystine Mejia, Editorial Coordinator, Journal of Neurodegeneration and Neurorehabilitation, Auctores Publishing LLC, We would like to thank the editorial team for the smooth and high-quality communication leading up to the publication of our article in the Journal of Neurodegeneration and Neurorehabilitation. The reviewers have extensive knowledge in the field, and their relevant questions helped to add value to our publication. Kind regards, Dr. Ravi Shrivastava.
